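Performance optimization starts with identifying key metrics, which are usually related to latency and throughput. Adding monitoring that captures and tracks these metrics can reveal weak points in your application. Once you have metrics, you can optimize to improve them.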

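In addition, many monitoring tools let you set alerts on metrics so that you are notified when a metric reaches a specific threshold. For example, you can set an alert that notifies you when the percentage of failed requests rises more than x% above its normal level. Monitoring tools help you establish what normal performance looks like and spot abnormal spikes in latency, error counts, and other key metrics. Being able to monitor these metrics is especially important during business-critical periods and after new code is pushed to production.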
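As a minimal sketch of the alert rule in the example above, the Python below compares a current failure rate against a baseline. The function names, the baseline value, and the reading of "x% above normal" as a relative increase over the baseline rate are assumptions for illustration; real monitoring tools express this as a configured policy rather than as application code.

```python
def failure_rate(failed: int, total: int) -> float:
    """Return the fraction of failed requests (0.0 when there were no requests)."""
    return failed / total if total else 0.0


def should_alert(failed: int, total: int, baseline_rate: float, x_percent: float) -> bool:
    """Return True when the current failure rate exceeds the baseline by more than x%.

    Assumption: "x% above normal" is read as a relative increase over the
    baseline failure rate, e.g. a 2% baseline with x=50 gives a 3% threshold.
    """
    threshold = baseline_rate * (1 + x_percent / 100)
    return failure_rate(failed, total) > threshold


if __name__ == "__main__":
    # Baseline of 2% failures with x = 50, so the alert fires above 3%.
    print(should_alert(failed=25, total=1000, baseline_rate=0.02, x_percent=50))  # 2.5% -> False
    print(should_alert(failed=40, total=1000, baseline_rate=0.02, x_percent=50))  # 4.0% -> True
```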

Identify latency metrics

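Keep your UI as responsive as possible, bearing in mind that users have even higher expectations for mobile apps. You should also measure and track the latency of backend services, especially because latency, if left unchecked, can lead to throughput problems.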

Suggested metrics to track include:

- Request duration

- Request duration at the subsystem level (for example, API calls)

- Job duration
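One way to capture the three durations listed above is a small timing context manager. This is a hypothetical, in-memory sketch: the `timed` helper, the `durations` store, and the metric names are illustrative, and `time.sleep` stands in for real work. In practice you would send these measurements to your monitoring backend.

```python
import time
from collections import defaultdict
from contextlib import contextmanager

# Hypothetical in-memory store: metric name -> list of durations in seconds.
durations = defaultdict(list)


@contextmanager
def timed(metric_name: str):
    """Record the wall-clock duration of the enclosed block under metric_name."""
    start = time.perf_counter()
    try:
        yield
    finally:
        # Recorded even if the block raises, so failed requests are measured too.
        durations[metric_name].append(time.perf_counter() - start)


def handle_request() -> None:
    with timed("request"):                 # overall request duration
        with timed("subsystem.api_call"):  # subsystem-level duration, e.g. an API call
            time.sleep(0.01)               # stand-in for a real API call
        time.sleep(0.005)                  # stand-in for local processing


if __name__ == "__main__":
    handle_request()
    with timed("job.nightly_report"):      # job duration
        time.sleep(0.02)
    for name, values in durations.items():
        print(f"{name}: {values[-1] * 1000:.1f} ms")
```

Recording in a `finally` block means a request that raises an exception still contributes its latency, which keeps error-heavy periods from looking artificially fast.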

Identify throughput metrics

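Throughput measures the total number of requests served in a given period of time. Throughput can be affected by the latency of subsystems, so you might need to optimize for latency to improve throughput.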

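Here are some suggested metrics to track:

- Queries per second

- Amount of data transferred per second

- Number of I/O operations per second

- Resource utilization, such as CPU or memory usage

- Size of the processing backlog, such as pub/sub or number of threads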
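Queries per second and data transferred per second can both be derived from a sliding window of recorded events. The `ThroughputCounter` class below is a hypothetical in-memory sketch; it does not cover resource utilization or backlog size, which usually come from the host or the queueing system.

```python
from __future__ import annotations

import time
from collections import deque


class ThroughputCounter:
    """Count events in a sliding time window and report per-second rates."""

    def __init__(self, window_seconds: float = 60.0) -> None:
        self.window_seconds = window_seconds
        self._events: deque[tuple[float, int]] = deque()  # (timestamp, size in bytes)

    def record(self, size_bytes: int = 0, now: float | None = None) -> None:
        """Record one request and the number of bytes it transferred."""
        self._events.append((time.monotonic() if now is None else now, size_bytes))

    def _evict(self, now: float) -> None:
        # Drop events that fall outside the window (now - window, now].
        while self._events and self._events[0][0] <= now - self.window_seconds:
            self._events.popleft()

    def queries_per_second(self, now: float | None = None) -> float:
        now = time.monotonic() if now is None else now
        self._evict(now)
        return len(self._events) / self.window_seconds

    def bytes_per_second(self, now: float | None = None) -> float:
        now = time.monotonic() if now is None else now
        self._evict(now)
        return sum(size for _, size in self._events) / self.window_seconds


if __name__ == "__main__":
    counter = ThroughputCounter(window_seconds=10)
    for t in range(20):  # one 1 KiB request per second for 20 seconds
        counter.record(size_bytes=1024, now=float(t))
    print(counter.queries_per_second(now=19.0))  # 1.0
    print(counter.bytes_per_second(now=19.0))    # 1024.0
```

Passing `now` explicitly, as in the example, makes the calculation deterministic for testing; in production the default `time.monotonic()` avoids jumps caused by wall-clock adjustments.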

Percentiles, not just averages

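A common mistake when measuring performance is to look only at the average. While the average is useful, it gives no insight into how latency is distributed. A better metric to track is performance percentiles, for example the 50th/75th/90th/99th percentile of a metric.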

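Optimization can generally be done in two steps. First, optimize for 90th percentile latency. Then, consider 99th percentile latency, also known as tail latency: the small fraction of requests that take much longer to complete.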
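The gap between the average and the percentiles is easy to see on a skewed sample. The sketch below uses Python's standard `statistics` module (Python 3.8+); the sample data is made up for illustration: 95 requests at 100 ms and 5 at 2,000 ms.

```python
from __future__ import annotations

import statistics


def latency_percentiles(samples_ms: list[float]) -> dict[str, float]:
    """Return the mean and p50/p75/p90/p99 of a list of latency samples."""
    # quantiles(n=100) returns the 99 cut points for percentiles 1..99,
    # so percentile k is at index k - 1.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {
        "mean": statistics.fmean(samples_ms),
        "p50": cuts[49],
        "p75": cuts[74],
        "p90": cuts[89],
        "p99": cuts[98],
    }


if __name__ == "__main__":
    samples = [100] * 95 + [2000] * 5
    print(latency_percentiles(samples))
    # mean 195.0, p50 100.0, p75 100.0, p90 100.0, p99 2000.0
```

Here the mean (195 ms) describes no actual request, p90 (100 ms) looks healthy, and only p99 (2,000 ms) exposes the slow tail, which is why tail latency is treated as a separate optimization step.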

Server-side monitoring for detailed results

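Server-side profiling is generally preferred for tracking metrics. The server side is usually easier to instrument, has access to more granular data, and is less subject to disruption from connectivity issues.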

Browser monitoring for end-to-end visibility

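Browser profiling can provide additional insight into the end-user experience. It can show which pages have slow requests, which you can then correlate with server-side monitoring for further analysis.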

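Google Analytics provides out-of-the-box monitoring of page load times in the Page Timings report. This offers several useful views for understanding the user experience on your site, in particular:

- Page load time

- Redirect load time

- Server response time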

Monitoring in the cloud

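There are many tools you can use to capture and monitor your application's performance metrics. For example, you can use Google Cloud Logging to log performance metrics to your Google Cloud project, and then set up dashboards in Google Cloud Monitoring to monitor and segment the logged metrics.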

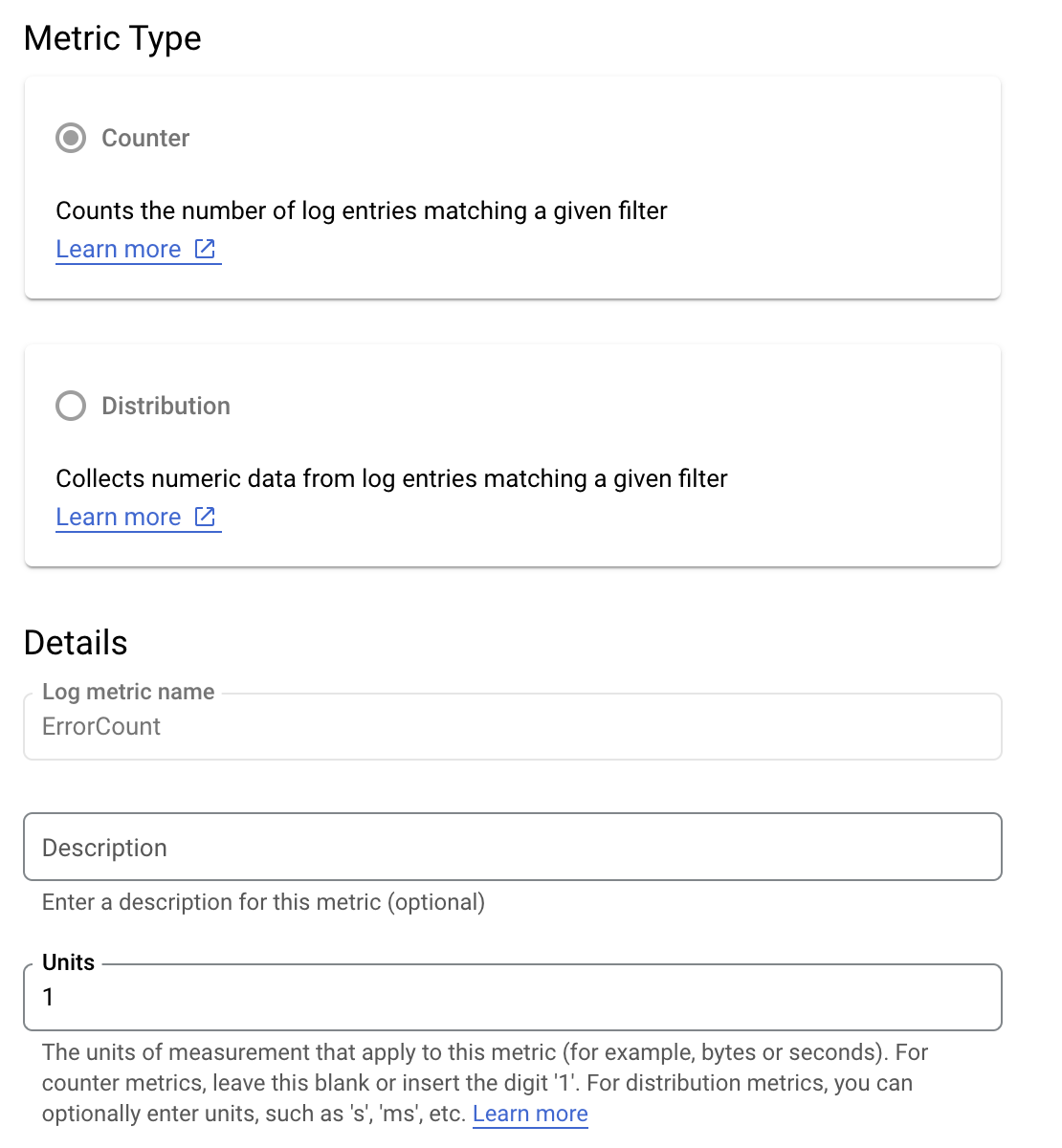

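For an example of logging to Google Cloud Logging from a custom interceptor in the Python client library, see the Logging guide. Once the data is in Google Cloud, you can build metrics on top of the logged data to gain visibility into your application through Google Cloud Monitoring. Follow the instructions in the user-defined log-based metrics guide to build metrics from the logs sent to Google Cloud Logging.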
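The interceptor example from the Logging guide is not reproduced here. As a stand-in, the sketch below uses only the standard `logging` and `json` modules to emit one JSON object per line. The `log_api_call` hook, the `latency_ms` field, and the example method name are hypothetical; the `method` and `is_fault` fields match the log-based metric example that follows. In environments such as Cloud Run, JSON lines written to stdout are ingested by Cloud Logging as structured `jsonPayload` entries, with the `severity` field setting the log level; elsewhere you would typically use the `google-cloud-logging` library instead.

```python
import json
import logging
import sys
import time


class JsonFormatter(logging.Formatter):
    """Format each log record as a single JSON object on one line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "severity": record.levelname,
            "message": record.getMessage(),
        }
        # Merge structured fields passed through extra={"fields": {...}}.
        payload.update(getattr(record, "fields", {}))
        return json.dumps(payload)


logger = logging.getLogger("ads_performance")
handler = logging.StreamHandler(sys.stdout)
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)


def log_api_call(method: str, is_fault: bool, latency_ms: float) -> None:
    """Hypothetical hook that an interceptor could call after each API request."""
    logger.info(
        "API call completed",
        extra={"fields": {"method": method, "is_fault": is_fault, "latency_ms": latency_ms}},
    )


if __name__ == "__main__":
    start = time.perf_counter()
    # ... a real API call would go here ...
    elapsed_ms = (time.perf_counter() - start) * 1000
    log_api_call("GoogleAdsService.Search", False, elapsed_ms)  # illustrative method name
```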

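Alternatively, you can use the Monitoring client libraries to define metrics in your code and send them directly to Monitoring, keeping them separate from the logs.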
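The Monitoring client libraries wrap the Cloud Monitoring API's `projects.timeSeries.create` method. As a library-agnostic sketch, the function below only builds the JSON request body for a single point of a custom metric and does not send it; authentication and the HTTP call are left out. The project ID, the metric type `custom.googleapis.com/ads/error_count`, and the label value are hypothetical, and the field layout reflects my reading of the REST reference, so check it against the current API documentation before relying on it.

```python
from __future__ import annotations

import json
from datetime import datetime, timezone


def build_time_series_request(project_id: str, metric_type: str, value: int,
                              labels: dict[str, str], end_time: datetime) -> dict:
    """Build a timeSeries.create request body holding one GAUGE INT64 point.

    Assumes end_time is timezone-aware; a naive datetime is treated as local time.
    """
    end_utc = end_time.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    return {
        "timeSeries": [
            {
                "metric": {"type": metric_type, "labels": labels},
                # "global" is a generic monitored-resource type keyed by project ID.
                "resource": {"type": "global", "labels": {"project_id": project_id}},
                "metricKind": "GAUGE",
                "valueType": "INT64",
                "points": [
                    {
                        # A GAUGE point needs only an end time, in RFC 3339 UTC form.
                        "interval": {"endTime": end_utc},
                        # int64 values are encoded as JSON strings by the REST API.
                        "value": {"int64Value": str(value)},
                    }
                ],
            }
        ]
    }


if __name__ == "__main__":
    body = build_time_series_request(
        project_id="my-project",                              # hypothetical project ID
        metric_type="custom.googleapis.com/ads/error_count",  # hypothetical metric type
        value=3,
        labels={"method": "GoogleAdsService.Search"},         # illustrative label value
        end_time=datetime.now(timezone.utc),
    )
    # An authenticated client would POST this body to
    # https://monitoring.googleapis.com/v3/projects/my-project/timeSeries
    print(json.dumps(body, indent=2))
```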

Log-based metrics example

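Suppose you want to monitor the is_fault value to better understand the error rate in your application. You can extract the is_fault value from the logs into a new counter metric, ErrorCount.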

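In Cloud Logging, labels let you group metrics into categories based on other data in the logs. You can configure a label for the method field sent to Cloud Logging to see how the error count breaks down by Google Ads API method.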

After you configure the ErrorCount metric and the Method label, you can create a new chart in a Monitoring dashboard that monitors ErrorCount grouped by Method.
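In Cloud Logging the metric itself is configured rather than coded, for example with a filter such as `jsonPayload.is_fault=true` and a label extractor such as `EXTRACT(jsonPayload.method)`, assuming both fields are logged as a JSON payload. To check what the resulting chart should show, the aggregation can be approximated locally. The sketch below is hypothetical: it counts JSON log lines whose `is_fault` is true, grouped by `method`, and the sample method names are illustrative.

```python
from __future__ import annotations

import json
from collections import Counter


def error_count_by_method(log_lines: list[str]) -> Counter:
    """Count entries with is_fault == true, grouped by their method field.

    Approximates what an ErrorCount counter metric with a method label
    would report over the same log entries.
    """
    counts: Counter = Counter()
    for line in log_lines:
        entry = json.loads(line)
        if entry.get("is_fault") is True:
            counts[entry.get("method", "unknown")] += 1
    return counts


if __name__ == "__main__":
    sample_logs = [
        '{"method": "GoogleAdsService.Search", "is_fault": true}',
        '{"method": "GoogleAdsService.Search", "is_fault": false}',
        '{"method": "CampaignService.MutateCampaigns", "is_fault": true}',
        '{"method": "GoogleAdsService.Search", "is_fault": true}',
    ]
    for method, errors in error_count_by_method(sample_logs).most_common():
        print(f"{method}: {errors}")
```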

Alerts

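You can configure alerting policies in Cloud Monitoring and other tools that specify when and how metrics should trigger alerts. For instructions on setting up Cloud Monitoring alerts, see the alerting guide.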
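Threshold conditions in Cloud Monitoring can require a metric to stay past the threshold for a period of time before an alert fires, which filters out brief spikes. The function below is a simplified, hypothetical sketch of that idea over raw samples; it does not reproduce how Cloud Monitoring aligns and aggregates time series, and the sample error rates are made up.

```python
from __future__ import annotations


def first_alert_time(samples: list[tuple[float, float]], threshold: float,
                     duration_s: float) -> float | None:
    """Return the timestamp at which an alert would fire, or None.

    The alert fires once the metric has stayed above `threshold` for at
    least `duration_s` seconds. `samples` is a time-ordered list of
    (timestamp_seconds, value) pairs.
    """
    breach_start = None
    for ts, value in samples:
        if value > threshold:
            if breach_start is None:
                breach_start = ts  # start of the current breach
            if ts - breach_start >= duration_s:
                return ts
        else:
            breach_start = None  # breach interrupted, so reset the timer
    return None


if __name__ == "__main__":
    # Error rate sampled once a minute: a short spike at 60-120 s, then a sustained rise.
    error_rates = [(0, 0.01), (60, 0.05), (120, 0.06), (180, 0.02),
                   (240, 0.07), (300, 0.08), (360, 0.09)]
    print(first_alert_time(error_rates, threshold=0.03, duration_s=120))  # 360
```

With a 120-second duration, the short spike between 60 and 120 seconds does not fire; only the sustained rise that starts at 240 seconds does.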