Page Summary

- The Scene Semantics API provides real-time, ML model-based semantic information about the scene surrounding the user in outdoor environments.
- The API returns a semantic image with a label for each pixel and a corresponding confidence image indicating the probability of each label.
- You can check whether a device supports the Scene Semantics API, and you must enable semantic mode in the ARCore session configuration to use it.
- You can retrieve the semantic and confidence images using Frame.acquireSemanticImage() and Frame.acquireSemanticConfidenceImage().
- A more efficient way to check for the prevalence of a semantic label is to query the fraction of pixels belonging to that class using Frame.getSemanticLabelFraction().

Learn how to use the Scene Semantics API in your own apps.

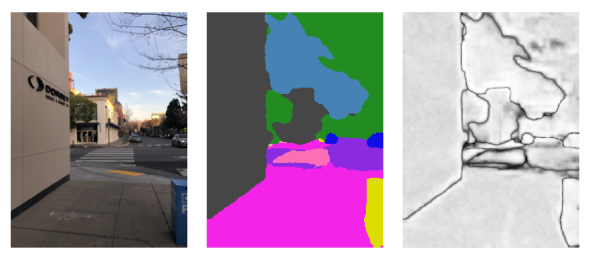

The Scene Semantics API helps developers understand the scene surrounding the user by providing real-time, ML model-based semantic information. Given an image of an outdoor scene, the API returns a label for each pixel from a set of useful semantic classes, such as sky, building, tree, road, sidewalk, vehicle, person, and more. In addition to pixel labels, the Scene Semantics API also provides a confidence value for each pixel label and an easy way to query the prevalence of a given label in an outdoor scene.

From left to right, examples of an input image, the semantic image of pixel labels, and the corresponding confidence image:

Prerequisites

Make sure that you understand fundamental AR concepts and how to configure an ARCore session before proceeding.

Enable Scene Semantics

In a new ARCore session, check whether a user's device supports the Scene Semantics API. Not all ARCore-compatible devices support the Scene Semantics API due to processing power constraints.

To save resources, Scene Semantics is disabled by default on ARCore. Enable semantic mode to have your app use the Scene Semantics API.

Java

```java
Config config = session.getConfig();

// Check whether the user's device supports the Scene Semantics API.
boolean isSceneSemanticsSupported =
    session.isSemanticModeSupported(Config.SemanticMode.ENABLED);
if (isSceneSemanticsSupported) {
  config.setSemanticMode(Config.SemanticMode.ENABLED);
}
session.configure(config);
```

Kotlin

```kotlin
val config = session.config

// Check whether the user's device supports the Scene Semantics API.
val isSceneSemanticsSupported =
  session.isSemanticModeSupported(Config.SemanticMode.ENABLED)
if (isSceneSemanticsSupported) {
  config.semanticMode = Config.SemanticMode.ENABLED
}
session.configure(config)
```

Obtain the semantic image

Once Scene Semantics is enabled, you can retrieve the semantic image. The semantic image is an ImageFormat.Y8 image, where each pixel corresponds to a semantic label defined by SemanticLabel.

Use Frame.acquireSemanticImage() to acquire the semantic image:

Java

```java
// Retrieve the semantic image for the current frame, if available.
try (Image semanticImage = frame.acquireSemanticImage()) {
  // Use the semantic image here.
} catch (NotYetAvailableException e) {
  // No semantic image retrieved for this frame.
  // The output image may be missing for the first couple frames before the model has had a
  // chance to run yet.
}
```

Kotlin

```kotlin
// Retrieve the semantic image for the current frame, if available.
try {
  frame.acquireSemanticImage().use { semanticImage ->
    // Use the semantic image here.
  }
} catch (e: NotYetAvailableException) {
  // No semantic image retrieved for this frame.
}
```

Output semantic images should be available after about 1-3 frames from the start of the session, depending on the device.
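On Android, you typically read the pixels of a Y8 image from its single plane. You get the buffer with `Image.getPlanes()[0].getBuffer()` and the layout with `getRowStride()` and `getPixelStride()`.

The following minimal sketch shows only the indexing step, in plain Java, so it runs without a device. It makes these assumptions:
- The pixel stride is 1.
- The row stride may be larger than the image width, because rows can be padded.
- The class `Y8Reader` and its method `valueAt` are hypothetical, not part of ARCore.

Mapping the returned byte value to a `SemanticLabel` is not shown.

```java
import java.nio.ByteBuffer;

/** Hypothetical helper that reads per-pixel values from a single-plane Y8 buffer. */
final class Y8Reader {
    private Y8Reader() {}

    /**
     * Returns the unsigned 8-bit value at (x, y).
     * Assumes a pixel stride of 1; rowStride may exceed the width because of row padding.
     */
    static int valueAt(ByteBuffer buffer, int rowStride, int x, int y) {
        // Absolute get(int) does not change the buffer's position.
        byte raw = buffer.get(y * rowStride + x);
        // Java bytes are signed, so mask to recover the 0..255 value.
        return raw & 0xFF;
    }

    public static void main(String[] args) {
        int rowStride = 4; // 3 visible pixels per row plus 1 padding byte (assumed layout)
        byte[] rows = {
            1, 2, 3, 0,          // row 0; the last byte is padding
            4, 5, (byte) 200, 0  // row 1; the last byte is padding
        };
        ByteBuffer buffer = ByteBuffer.wrap(rows);
        System.out.println(valueAt(buffer, rowStride, 2, 1)); // prints 200
    }
}
```

Indexing with `rowStride` rather than the width keeps the lookup correct when the platform pads rows. Masking with `0xFF` keeps values above 127 from turning negative.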

Obtain the confidence image

In addition to the semantic image, which provides a label for each pixel, the API also provides a confidence image of per-pixel confidence values. The confidence image is an ImageFormat.Y8 image, where each pixel holds a value in the range [0, 255] that corresponds to the probability associated with the semantic label for that pixel.

Use Frame.acquireSemanticConfidenceImage() to acquire the semantic confidence image:

Java

```java
// Retrieve the semantic confidence image for the current frame, if available.
try (Image semanticConfidenceImage = frame.acquireSemanticConfidenceImage()) {
  // Use the semantic confidence image here.
} catch (NotYetAvailableException e) {
  // No semantic confidence image retrieved for this frame.
  // The output image may be missing for the first couple frames before the model has had a
  // chance to run yet.
}
```

Kotlin

```kotlin
// Retrieve the semantic confidence image for the current frame, if available.
try {
  frame.acquireSemanticConfidenceImage().use { semanticConfidenceImage ->
    // Use the semantic confidence image here.
  }
} catch (e: NotYetAvailableException) {
  // No semantic confidence image retrieved for this frame.
}
```

Output confidence images should be available after about 1-3 frames from the start of the session, depending on the device.
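A common use is to combine the two images: keep only the pixels that carry a given label and whose confidence meets a threshold.

The following plain-Java sketch shows that idea. It makes these assumptions:
- A raw confidence byte maps linearly to a probability by dividing by 255. The exact calibration is not specified above.
- The label and confidence images have the same dimensions and are tightly packed into arrays (row stride equal to width).
- `targetLabel` is the raw 8-bit label value. Its mapping to `SemanticLabel` is not shown.
- `ConfidenceMask`, its methods, and the threshold value are hypothetical, not part of ARCore.

```java
/** Hypothetical helper that combines a semantic label buffer with its confidence buffer. */
final class ConfidenceMask {
    private ConfidenceMask() {}

    /** Maps a raw 0..255 confidence byte to [0.0, 1.0] (assumed linear mapping). */
    static float toProbability(byte rawConfidence) {
        return (rawConfidence & 0xFF) / 255f;
    }

    /**
     * Returns true for each pixel whose label equals targetLabel and whose confidence
     * is at least minProbability. Assumes both arrays are tightly packed and equally sized.
     */
    static boolean[] mask(byte[] labels, byte[] confidences, int targetLabel, float minProbability) {
        if (labels.length != confidences.length) {
            throw new IllegalArgumentException("label and confidence buffers differ in size");
        }
        boolean[] result = new boolean[labels.length];
        for (int i = 0; i < labels.length; i++) {
            int label = labels[i] & 0xFF; // treat the label byte as unsigned
            result[i] = label == targetLabel && toProbability(confidences[i]) >= minProbability;
        }
        return result;
    }

    public static void main(String[] args) {
        byte[] labels = {1, 1, 2, 1}; // hypothetical raw label values
        byte[] confidences = {(byte) 255, (byte) 100, (byte) 255, (byte) 230};
        boolean[] m = mask(labels, confidences, 1, 0.8f);
        System.out.println(java.util.Arrays.toString(m)); // prints [true, false, false, true]
    }
}
```

The threshold trades coverage for reliability. A higher `minProbability` drops uncertain pixels, such as those near object boundaries, at the cost of a sparser mask.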

Query the fraction of pixels for a semantic label

You can also query the fraction of pixels in the current frame that belong to a particular class, such as sky. This query is more efficient than acquiring the semantic image and performing a pixel-wise search for a specific label. The returned fraction is a float value in the range [0.0, 1.0].

Use Frame.getSemanticLabelFraction() to acquire the fraction for a given label:

Java

```java
// Retrieve the fraction of pixels for the semantic label sky in the current frame.
try {
  float outFraction = frame.getSemanticLabelFraction(SemanticLabel.SKY);
  // Use the semantic label fraction here.
} catch (NotYetAvailableException e) {
  // No fraction of semantic labels was retrieved for this frame.
}
```

Kotlin

```kotlin
// Retrieve the fraction of pixels for the semantic label sky in the current frame.
try {
  val fraction = frame.getSemanticLabelFraction(SemanticLabel.SKY)
  // Use the semantic label fraction here.
} catch (e: NotYetAvailableException) {
  // No fraction of semantic labels was retrieved for this frame.
}
```
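To show what the returned fraction means, the following sketch performs the pixel-wise search that `Frame.getSemanticLabelFraction()` spares you: count the pixels with a given label and divide by the total pixel count. This is plain Java, not ARCore's implementation. It assumes a tightly packed array of hypothetical raw label values, and the `LabelFraction` class is hypothetical. For a live frame, the built-in query described above remains the more efficient choice.

```java
/** Hypothetical pixel-wise equivalent of a label-fraction query. */
final class LabelFraction {
    private LabelFraction() {}

    /** Returns the fraction in [0.0, 1.0] of pixels whose label equals targetLabel. */
    static float fraction(byte[] labels, int targetLabel) {
        if (labels.length == 0) {
            return 0f; // avoid dividing by zero for an empty image
        }
        int count = 0;
        for (byte raw : labels) {
            if ((raw & 0xFF) == targetLabel) { // treat the label byte as unsigned
                count++;
            }
        }
        return (float) count / labels.length;
    }

    public static void main(String[] args) {
        byte[] labels = {1, 1, 2, 3}; // hypothetical raw label values
        System.out.println(fraction(labels, 1)); // prints 0.5
    }
}
```

This loop touches every pixel on every call. That per-frame cost is why a dedicated fraction query is preferable when you only need prevalence rather than per-pixel positions.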