Page Summary

- The Augmented Faces API allows rendering assets on human faces without specialized hardware by providing feature points to identify regions of a face.
- This API enables various face-based AR use cases like try-ons, filters, and effects.
- An Augmented Face consists of a center pose, three region poses, and a 468-point 3D face mesh, which help in placing assets accurately on the face.

Platform-specific guides

Android (Kotlin/Java)

Android NDK (C)

Unity (AR Foundation)

iOS

Unreal Engine

The Augmented Faces API allows you to render assets on top of human faces without using specialized hardware. It provides feature points that enable your app to automatically identify different regions of a detected face. Your app can then use those regions to overlay assets in a way that properly matches the contours of an individual face.

Use cases

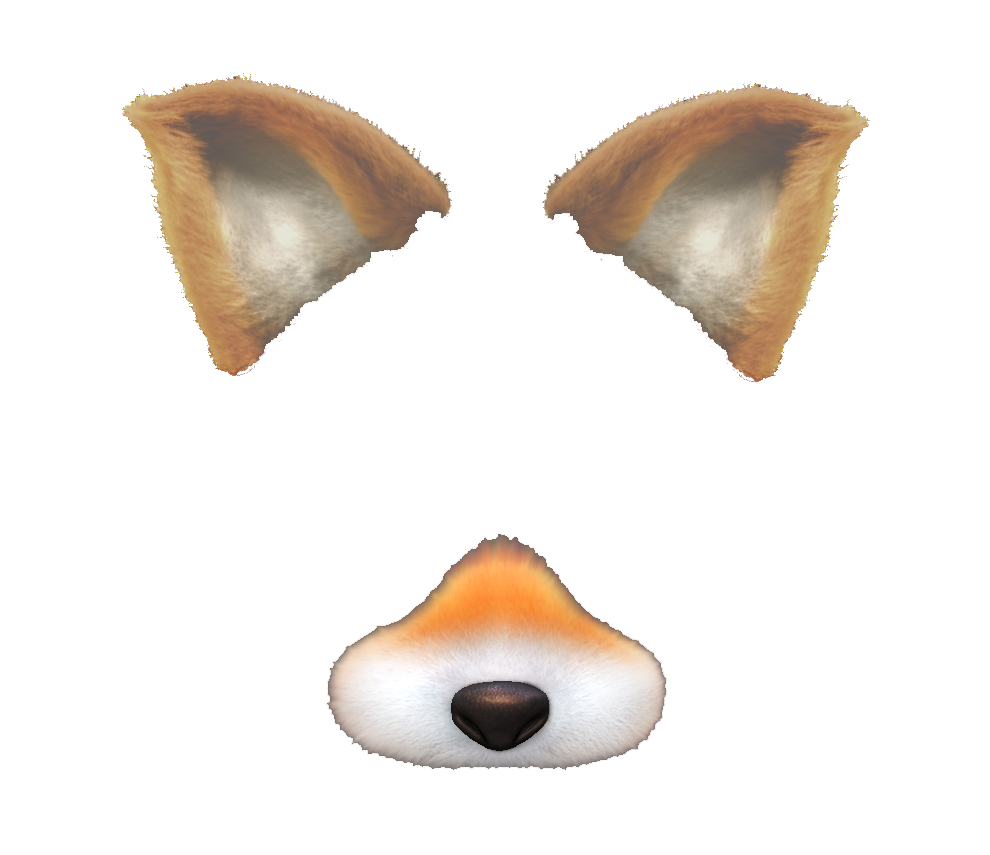

Face-based AR unlocks a wide array of use cases, from beauty and accessory try-ons to facial filters and effects that users can enjoy with their friends. For example, use 3D models and a texture to overlay the features of a fox onto a user's face.

The model consists of two fox ears and a fox nose. Each is a separate bone that can be moved individually to follow the facial region it is attached to.

The texture consists of eye shadow, freckles, and other coloring.

At runtime, the Augmented Faces API detects a user’s face and overlays both the texture and the models onto it.

Parts of an Augmented Face

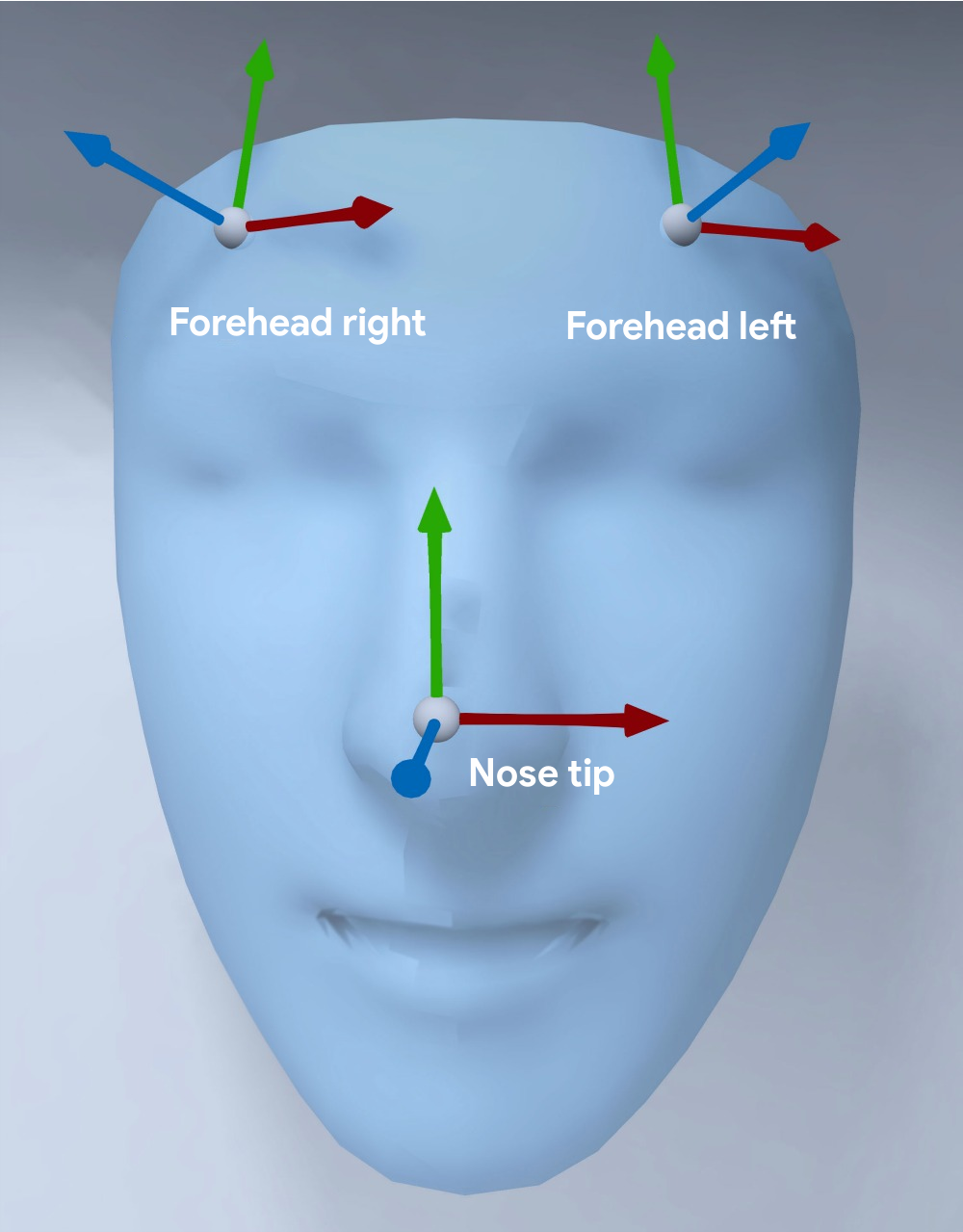

The Augmented Faces API provides a center pose, three region poses, and a 3D face mesh.

Center pose

Located behind the nose, the center pose marks the middle of a user’s head. Use it to render assets such as a hat on top of the head.
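To illustrate how an asset can be positioned relative to the center pose, here is a minimal sketch in plain Python rather than in any ARCore SDK. It assumes a pose is a translation plus a unit quaternion in `(x, y, z, w)` order, that units are meters, and that the pose's local +Y axis points toward the top of the head. The `Pose` type, `transform_point`, and the `HAT_OFFSET` value are hypothetical names and numbers chosen for the example.

```python
from typing import NamedTuple, Tuple

Vec3 = Tuple[float, float, float]


class Pose(NamedTuple):
    """Hypothetical rigid transform: translation plus unit quaternion."""
    translation: Vec3
    rotation: Tuple[float, float, float, float]  # (x, y, z, w), assumed unit length


def cross(a: Vec3, b: Vec3) -> Vec3:
    """Cross product of two 3D vectors."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def transform_point(pose: Pose, local: Vec3) -> Vec3:
    """Map a point from the pose's local frame into world space."""
    qx, qy, qz, qw = pose.rotation
    u = (qx, qy, qz)
    # Quaternion rotation: v' = v + w * t + u x t, where t = 2 * (u x v).
    t = tuple(2.0 * c for c in cross(u, local))
    ut = cross(u, t)
    rotated = tuple(local[i] + qw * t[i] + ut[i] for i in range(3))
    # Apply the translation after the rotation.
    return tuple(rotated[i] + pose.translation[i] for i in range(3))


# Hypothetical offset: 12 cm above the center of the head, along local +Y.
HAT_OFFSET: Vec3 = (0.0, 0.12, 0.0)

if __name__ == "__main__":
    # A face half a meter in front of the camera, with no head rotation.
    center = Pose(translation=(0.0, 0.0, -0.5), rotation=(0.0, 0.0, 0.0, 1.0))
    print(transform_point(center, HAT_OFFSET))
```

Because the offset is expressed in the pose's local frame, the hat follows the head as it turns: only the pose changes from frame to frame, while `HAT_OFFSET` stays fixed.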

Region poses

Located on the left forehead, right forehead, and tip of the nose, region poses mark important parts of a user’s face. Use them to render assets on the nose or around the ears.
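As a rough sketch of how region poses might drive the fox example, the following Python snippet maps each model bone to the region it should follow. It is not the SDK's API: the region labels, the bone names, and the `place_bones` helper are hypothetical, and each region is reduced to a position for brevity.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical labels for the three regions named in the text.
REGIONS = ("forehead_left", "forehead_right", "nose_tip")

# Hypothetical bone names for the fox model: two ears and a nose.
FOX_BONE_TO_REGION: Dict[str, str] = {
    "left_ear": "forehead_left",
    "right_ear": "forehead_right",
    "nose": "nose_tip",
}


def place_bones(region_positions: Dict[str, Vec3]) -> Dict[str, Vec3]:
    """Return the position each bone should take for the current frame."""
    missing = [r for r in REGIONS if r not in region_positions]
    if missing:
        raise KeyError(f"missing region positions: {missing}")
    return {
        bone: region_positions[region]
        for bone, region in FOX_BONE_TO_REGION.items()
    }


if __name__ == "__main__":
    # Made-up positions in meters, standing in for one frame of tracking data.
    frame = {
        "forehead_left": (-0.05, 0.08, 0.02),
        "forehead_right": (0.05, 0.08, 0.02),
        "nose_tip": (0.0, 0.0, 0.07),
    }
    for bone, position in place_bones(frame).items():
        print(bone, position)
```

Calling `place_bones` once per frame keeps each bone attached to its region as the face moves. A real renderer would also apply each region's rotation, which this sketch leaves out.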

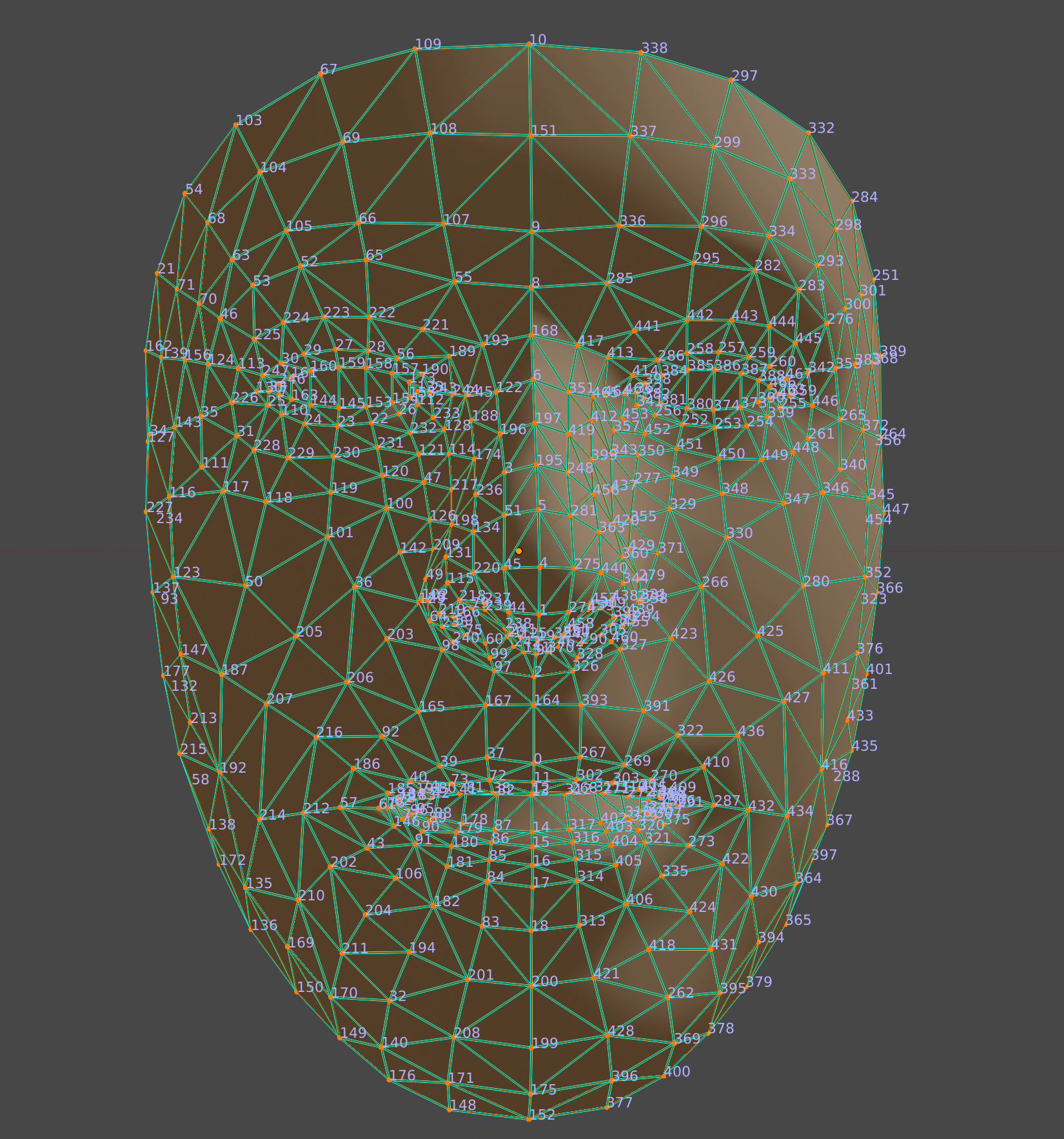

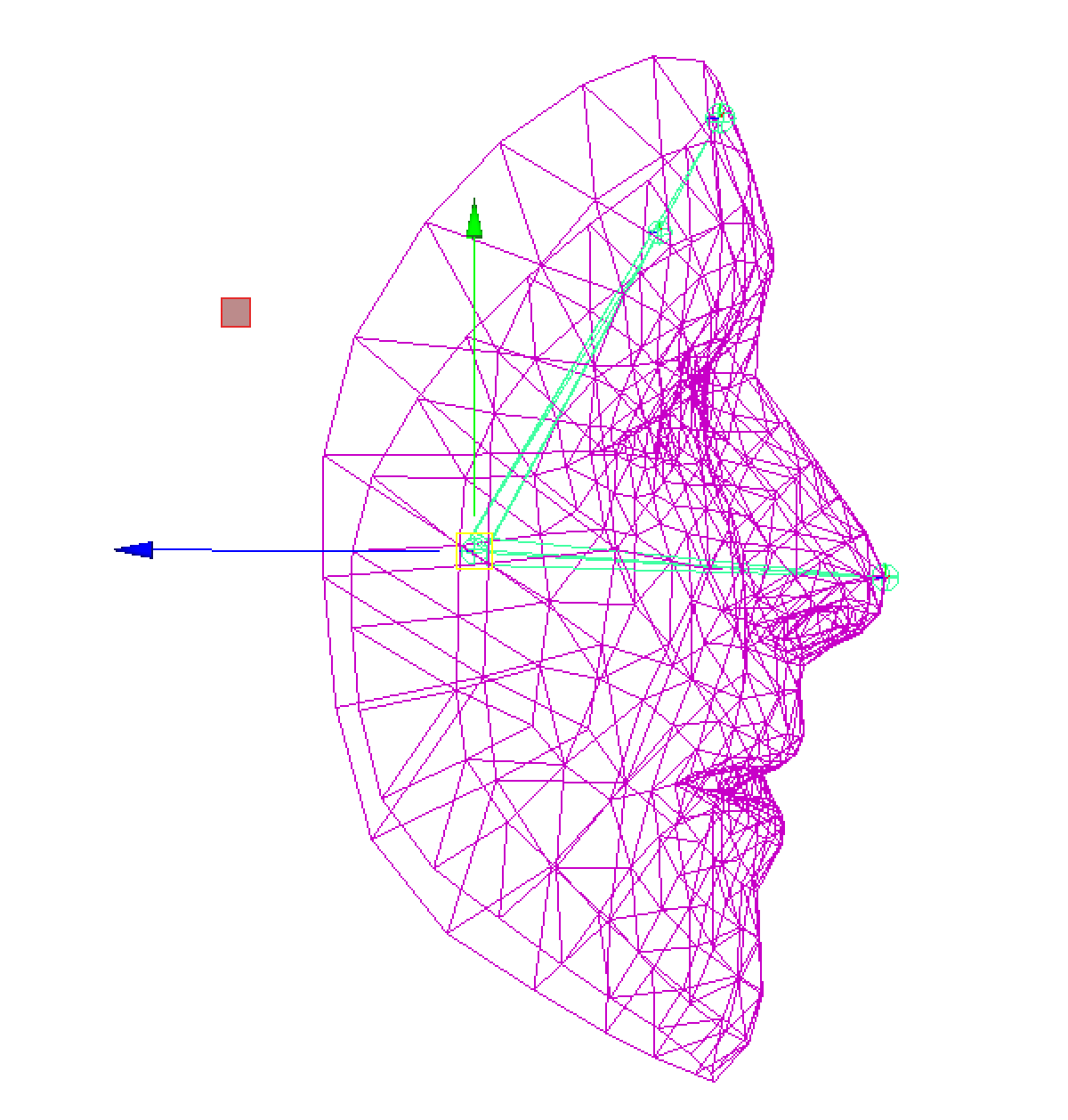

Face mesh

The 468-point dense 3D face mesh allows you to paint adaptable, detailed textures that accurately follow a face, for example when layering virtual glasses behind a specific part of the nose. The mesh provides enough detailed 3D information to render such overlays accurately.
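To make the mesh data more concrete, here is a small Python sketch that reads vertices and measures the face's width, which could be used to scale an asset such as glasses. It assumes the 468 vertices arrive as one flat sequence of `x, y, z` floats in meters. That layout and the helper names `vertex_at`, `mesh_extents`, and `face_width` are assumptions for illustration, not confirmed API details.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]

VERTEX_COUNT = 468       # point count stated for the face mesh
FLOATS_PER_VERTEX = 3    # assumed layout: x, y, z per vertex


def _check(buffer: Sequence[float]) -> None:
    """Reject buffers that do not hold exactly 468 xyz triples."""
    expected = VERTEX_COUNT * FLOATS_PER_VERTEX
    if len(buffer) != expected:
        raise ValueError(f"expected {expected} floats, got {len(buffer)}")


def vertex_at(buffer: Sequence[float], index: int) -> Vec3:
    """Return the (x, y, z) position of one mesh vertex."""
    _check(buffer)
    if not 0 <= index < VERTEX_COUNT:
        raise IndexError(f"vertex index out of range: {index}")
    base = index * FLOATS_PER_VERTEX
    return (buffer[base], buffer[base + 1], buffer[base + 2])


def mesh_extents(buffer: Sequence[float]) -> Tuple[Vec3, Vec3]:
    """Return the (min corner, max corner) of the mesh's bounding box."""
    _check(buffer)
    xs, ys, zs = buffer[0::3], buffer[1::3], buffer[2::3]
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def face_width(buffer: Sequence[float]) -> float:
    """Width of the face along the local X axis, in the buffer's units."""
    low, high = mesh_extents(buffer)
    return high[0] - low[0]


if __name__ == "__main__":
    # Synthetic stand-in for real tracking data: vertices spread along X.
    synthetic = []
    for i in range(VERTEX_COUNT):
        synthetic.extend((i / 1000.0, 0.0, 0.0))
    print(vertex_at(synthetic, 10), face_width(synthetic))
```

Measuring the mesh each frame, rather than hard-coding a size, lets an overlay adapt to different faces.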