The Google Meet Media API lets you access real-time media from Google Meet conferences. This enables a variety of use cases, such as apps that document action items, offer real-time insights about the current meeting, or stream audio and video to a new surface.

Use cases

Apps registered in the Google Cloud console can use the Meet Media API to connect to Meet conferences, enabling them to:

- Consume video streams. For example:
    - Feed video streams generated in Meet conferences into your own AI models.
    - Filter streams for custom recordings.
- Consume audio streams. For example:
    - Feed audio directly into Gemini and create your own meeting AI chatbot.
    - Feed audio streams generated in Meet conferences into your own transcription service.
    - Generate captions in various languages.
    - Create model-generated sign language feeds from the captured audio.
    - Create your own denoiser models to remove background noise and other artifacts from the conference audio.
- Consume participant metadata. For example:
    - Detect which participants are in the conference, allowing for better intelligence and analytics.

Meet Media API lifecycle

The following images show the Meet Media API lifecycle:


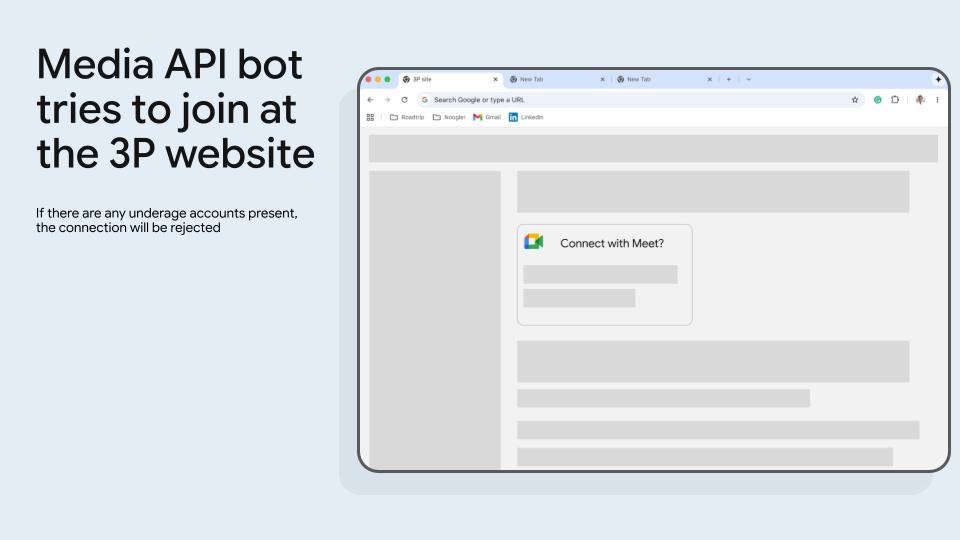

Figure 1. The Meet Media API bot tries to join the meeting from a third-party website. The connection is rejected if any underage accounts are present.

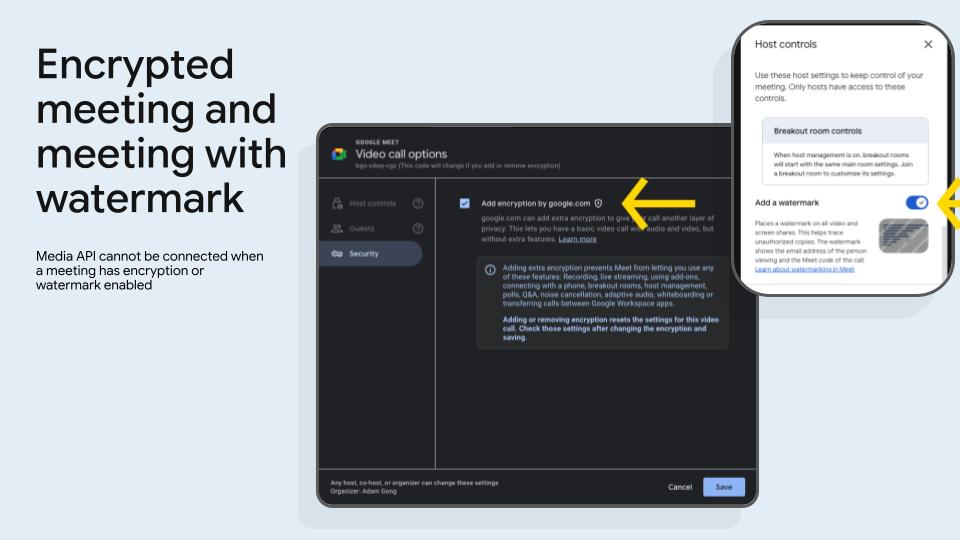

Figure 2. Meetings can be encrypted or watermarked. The Meet Media API can't connect to a meeting that has encryption or a watermark.

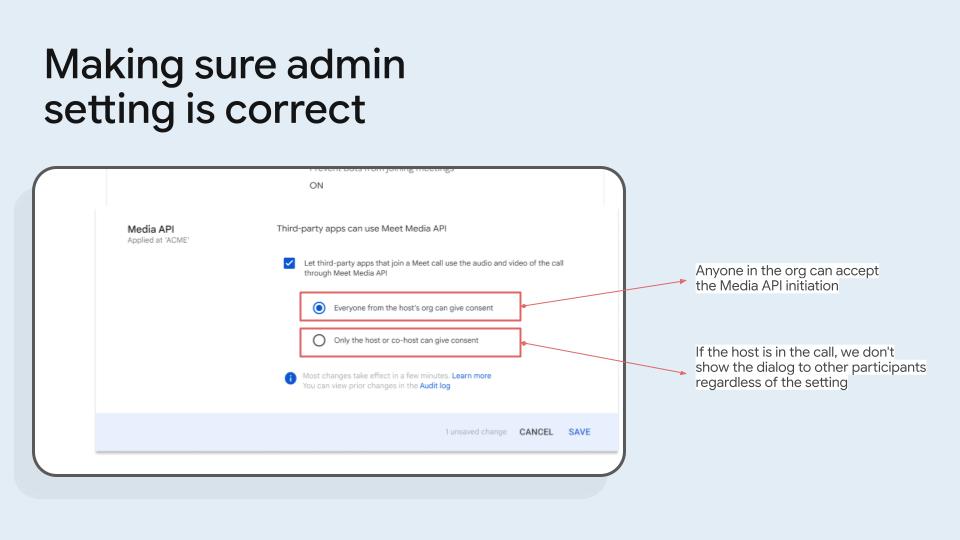

Figure 3. Make sure the administrator setting allows the Meet Media API.

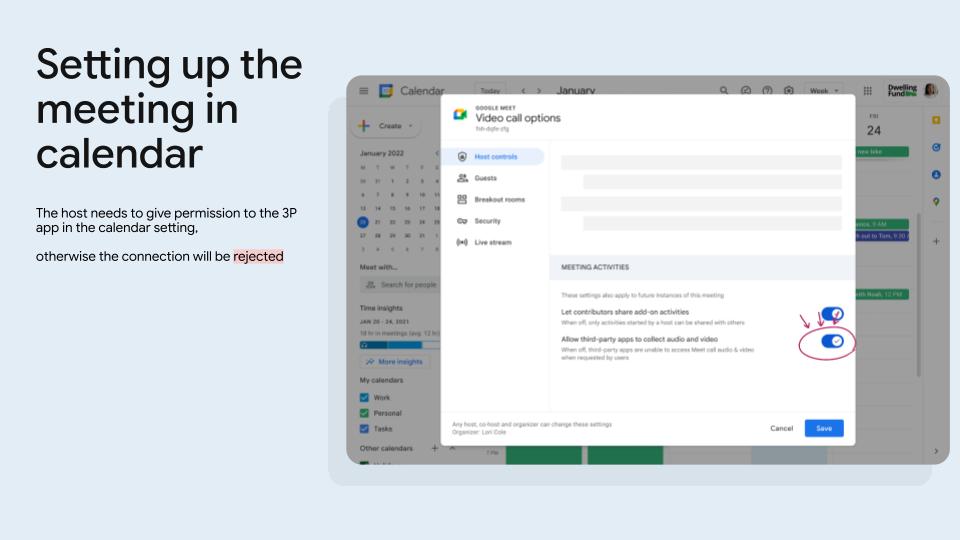

Figure 4. Set up the meeting in Calendar. The host needs to give the third-party app permission in the Calendar settings; otherwise, the connection is rejected.

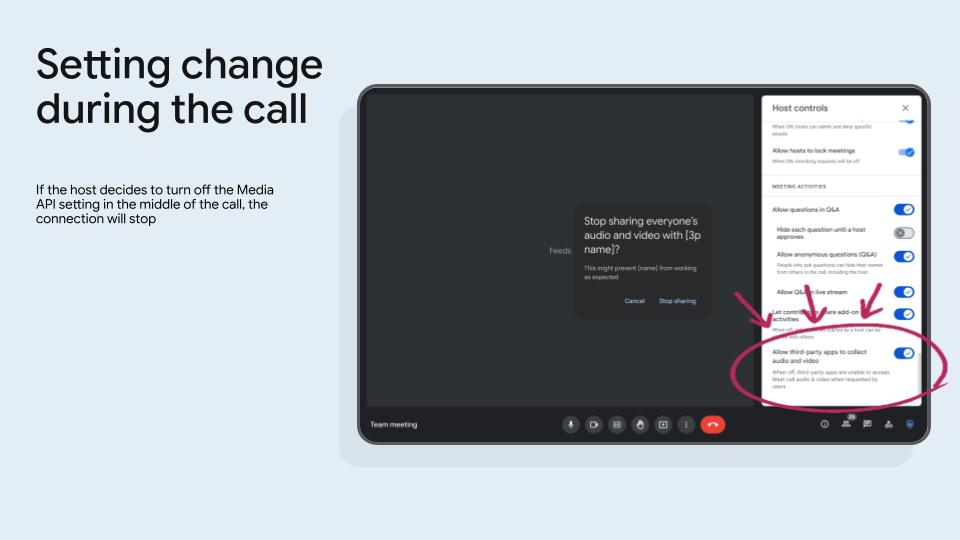

Figure 5. A setting change during the call. If the host turns off the Meet Media API setting during a call, the connection stops.

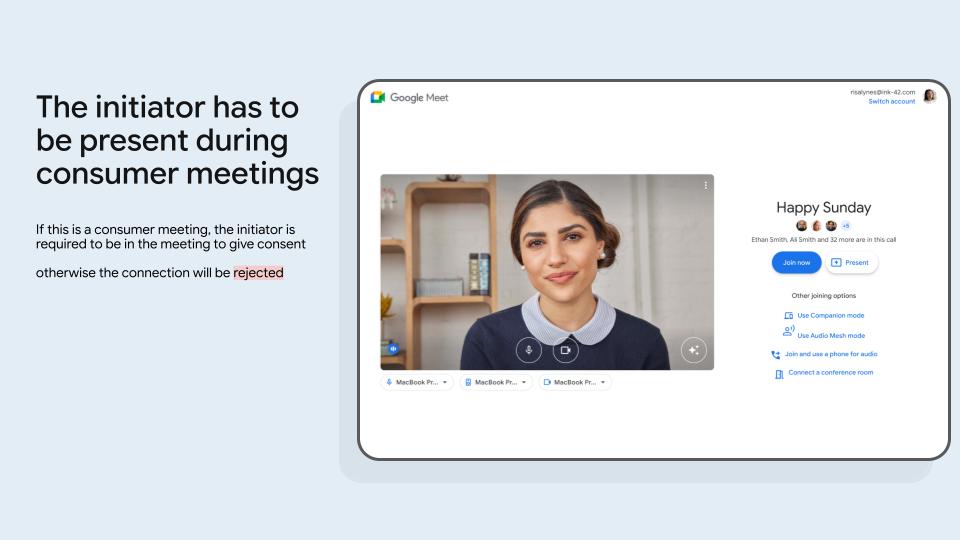

Figure 6. If the meeting owner has a consumer account (an account ending with @gmail.com), the initiator must be present in the meeting to give consent; otherwise, the connection is rejected.

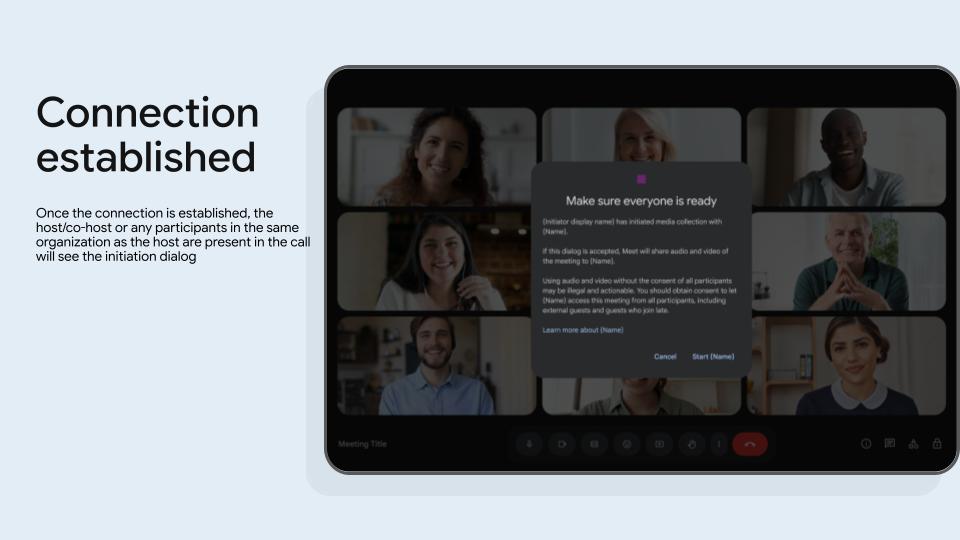

Figure 7. Once the connection is established, the host, co-host, or any participants in the same organization as the host see the initiation dialog.

Figure 8. Anyone can stop the Meet Media API during the call.
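The figures above list conditions under which Meet rejects or stops a Meet Media API connection. As a reading aid, the following TypeScript sketch encodes those conditions as a checklist, which can be useful when logging why a connection attempt failed. Meet enforces these rules on its servers. `MeetingConditions`, `blockingReasons`, and the reason strings are hypothetical names invented for this illustration and aren't part of any Google API. A client generally can't read these values directly.

```typescript
// Hypothetical snapshot of the conditions shown in Figures 1 to 5.
// None of these names come from a Google API; they are illustrative only.
interface MeetingConditions {
  underageAccountPresent: boolean; // Figure 1
  encrypted: boolean; // Figure 2
  watermarked: boolean; // Figure 2
  adminAllowsMediaApi: boolean; // Figure 3
  hostAllowsMediaApi: boolean; // Figures 4 and 5
}

type BlockingReason =
  | "underage-account-present"
  | "meeting-encrypted"
  | "meeting-watermarked"
  | "disabled-by-admin"
  | "disabled-by-host";

// Returns every modeled condition that blocks the connection.
// An empty array means none of these gates applies. Because the host can
// turn the setting off mid-call (Figure 5), the checks matter for the
// whole call, not only at join time.
function blockingReasons(c: MeetingConditions): BlockingReason[] {
  const reasons: BlockingReason[] = [];
  if (c.underageAccountPresent) reasons.push("underage-account-present");
  if (c.encrypted) reasons.push("meeting-encrypted");
  if (c.watermarked) reasons.push("meeting-watermarked");
  if (!c.adminAllowsMediaApi) reasons.push("disabled-by-admin");
  if (!c.hostAllowsMediaApi) reasons.push("disabled-by-host");
  return reasons;
}

const example: MeetingConditions = {
  underageAccountPresent: false,
  encrypted: false,
  watermarked: true,
  adminAllowsMediaApi: true,
  hostAllowsMediaApi: true,
};
const reasons = blockingReasons(example); // ["meeting-watermarked"]
```

The consent requirement in Figure 6 isn't modeled here. It depends on who is present in the call, not on meeting settings.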

Consenter requirements

Meet Media API apps are permitted into a meeting only if someone in the call is allowed to provide consent on behalf of the meeting.

For Google Workspace meetings

To provide consent in Google Workspace meetings, you must be in the organization that owns the meeting. In most cases, the owner of the meeting is the same as the organizer. If the host or the initiator is in the meeting and belongs to the organization that owns the meeting, they're shown the start dialog in preference to other eligible participants.

For consumer meetings

For meetings organized by Gmail accounts, the initiator must be in the meeting to provide consent.
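The following TypeScript sketch models the consent rules above as a function that decides who, if anyone, should see the start dialog. It is a simplified model that rests on several assumptions. `Participant`, `Meeting`, and their fields are hypothetical types, not Meet API resources. The fallback to any eligible participant when neither the host nor the initiator qualifies is inferred from the rule that any member of the owning organization can consent.

```typescript
// Hypothetical, simplified types; not Meet API resources.
interface Participant {
  id: string;
  orgId: string | null; // null for consumer (Gmail) accounts
}

interface Meeting {
  kind: "workspace" | "consumer";
  owningOrgId: string | null; // organization that owns a Workspace meeting
  hostId: string;
  initiatorId: string; // user who started the Meet Media API app
}

// Returns the participant who should be offered the start dialog,
// or null if nobody present can consent (the app is then rejected).
function pickConsenter(meeting: Meeting, present: Participant[]): Participant | null {
  if (meeting.kind === "consumer") {
    // Consumer meetings: only the initiator can consent.
    return present.find((p) => p.id === meeting.initiatorId) ?? null;
  }
  // Workspace meetings: consenters must belong to the owning organization.
  const eligible = present.filter(
    (p) => meeting.owningOrgId !== null && p.orgId === meeting.owningOrgId,
  );
  // Prefer the host or the initiator when they're eligible.
  const preferred = eligible.find(
    (p) => p.id === meeting.hostId || p.id === meeting.initiatorId,
  );
  return preferred ?? eligible[0] ?? null;
}

const meeting: Meeting = {
  kind: "workspace",
  owningOrgId: "example.com",
  hostId: "host",
  initiatorId: "initiator",
};
const attendees: Participant[] = [
  { id: "guest", orgId: null },
  { id: "colleague", orgId: "example.com" },
];
const consenter = pickConsenter(meeting, attendees); // the "colleague" participant
```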

Common terms

- Cloud project number

An immutable, generated `int64` identifier for a Google Cloud project. The Google Cloud console generates these values for each registered app.

- Conference

A server-generated instance of a call within a meeting space. Users typically think of a conference as a single meeting.

- Conference Resource Data Channel

Rather than requesting resources over HTTP, as with the Google Meet REST API, Meet Media API clients request resources from the server over data channels.

The client can open a dedicated data channel for each resource type. Once a channel is open, the client sends requests over it, and the server transmits resource updates over the same channel.
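The request-and-update flow over a resource data channel can be sketched as a small correlator. It matches each response to its request and routes every other message to an update handler. This is a generic pattern built on assumptions: the JSON message shape (a `requestId` field on requests and responses, with anything else treated as an update) and the `ChannelLike` interface are hypothetical and don't represent the Meet Media API wire format. In a browser, an `RTCDataChannel` satisfies `ChannelLike` through its `send` method.

```typescript
type Json = Record<string, unknown>;

// The only part of RTCDataChannel this sketch needs; a fake works in tests.
interface ChannelLike {
  send(data: string): void;
}

type ResponseHandler = (response: Json) => void;

class ResourceChannelClient {
  private nextId = 1;
  private pending = new Map<number, ResponseHandler>();
  private channel: ChannelLike;
  private onUpdate: (update: Json) => void;

  constructor(channel: ChannelLike, onUpdate: (update: Json) => void) {
    this.channel = channel;
    this.onUpdate = onUpdate;
  }

  // Sends a request tagged with a fresh requestId (hypothetical field name)
  // and remembers which handler should receive the matching response.
  request(body: Json, onResponse: ResponseHandler): number {
    const requestId = this.nextId++;
    this.pending.set(requestId, onResponse);
    this.channel.send(JSON.stringify({ ...body, requestId }));
    return requestId;
  }

  // Feed every incoming message here. Responses go to their request's
  // handler; anything else is treated as a resource update pushed on
  // the same channel.
  handleMessage(raw: string): void {
    const message = JSON.parse(raw) as Json;
    const id = message["requestId"];
    if (typeof id === "number") {
      const handler = this.pending.get(id);
      if (handler) {
        this.pending.delete(id);
        handler(message);
        return;
      }
    }
    this.onUpdate(message);
  }
}

// Browser wiring, sketched (the channel label is hypothetical):
// const dc = pc.createDataChannel("example-resource");
// const client = new ResourceChannelClient(dc, (u) => console.log(u));
// dc.onmessage = (event) => client.handleMessage(event.data);
```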

- Contributing Source (CSRC)

With virtual media streams, you can't assume that a media stream always points to the same participant. The CSRC value in each RTP packet's header identifies the true source of the packet.

Meet assigns each participant in a conference a unique CSRC value when they join. This value remains constant until they leave.
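Reading the CSRC list from an RTP packet follows the fixed header layout in RFC 3550. The low four bits of the first byte hold the CSRC count (CC), and the CSRC identifiers follow the 12-byte fixed header as 32-bit big-endian values. The following TypeScript sketch parses that header from raw packet bytes. WebRTC stacks usually expose contributing sources for you (browsers provide `RTCRtpReceiver.getContributingSources()`, for example). Treat this sketch as an illustration of where the value lives, not as a required step.

```typescript
interface RtpHeader {
  version: number;
  payloadType: number;
  sequenceNumber: number;
  timestamp: number;
  ssrc: number;
  csrcs: number[];
}

// Parses the RTP fixed header (RFC 3550, section 5.1) and its CSRC list.
// Header extensions, padding, and the payload are not parsed.
function parseRtpHeader(packet: Uint8Array): RtpHeader {
  if (packet.length < 12) throw new Error("packet shorter than RTP fixed header");
  const view = new DataView(packet.buffer, packet.byteOffset, packet.byteLength);
  const first = view.getUint8(0);
  const csrcCount = first & 0x0f; // CC field: number of CSRC entries
  if (packet.length < 12 + 4 * csrcCount) throw new Error("truncated CSRC list");
  const csrcs: number[] = [];
  for (let i = 0; i < csrcCount; i++) {
    csrcs.push(view.getUint32(12 + 4 * i)); // DataView reads big-endian by default
  }
  return {
    version: first >> 6,
    payloadType: view.getUint8(1) & 0x7f, // drop the marker bit
    sequenceNumber: view.getUint16(2),
    timestamp: view.getUint32(4),
    ssrc: view.getUint32(8),
    csrcs,
  };
}

const packet = new Uint8Array([
  0x81, 0x6f, 0x00, 0x01, // V=2, CC=1, PT=111, sequence number 1
  0x00, 0x00, 0x00, 0x00, // timestamp
  0x11, 0x22, 0x33, 0x44, // SSRC
  0xaa, 0xbb, 0xcc, 0xdd, // CSRC[0]: the contributing participant
]);
const header = parseRtpHeader(packet); // header.csrcs is [0xaabbccdd]
```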

- Data channels

WebRTC Data Channels enable the exchange of arbitrary data (text, files, and so on) independently of audio and video streams. Data Channels use the same connection as media streams, providing an efficient way to add data exchange to WebRTC applications.

- Interactive Connectivity Establishment (ICE)

A protocol for establishing connectivity. ICE finds all possible routes for two computers to talk to each other through peer-to-peer (P2P) networking, and then makes sure the connection stays up.

- Media stream

A WebRTC Media Stream represents a flow of media data, typically audio or video, captured from a device like a camera or microphone. It consists of one or more media stream tracks, each representing a single source of media (such as a video track or an audio track).

- Media stream track

A single, unidirectional flow of RTP packets. A media stream track can be audio or video, but not both. A bidirectional Secure Real-time Transport Protocol (SRTP) connection typically consists of two media stream tracks: one egress track from the local peer to the remote peer, and one ingress track from the remote peer to the local peer.

- Meeting space

A virtual place or a persistent object (such as a meeting room) where a conference is held. Only one active conference can be held in one space at any time. A meeting space also helps users meet and find shared resources.

- Participant

A person who has joined a conference, uses Companion Mode, or is watching as a viewer, or a room device connected to a call. When a participant joins the conference, a unique ID is assigned to them.

- Relevant streams

A client can open only a limited number of Virtual Audio Streams and Virtual Video Streams.

The number of participants in a conference can exceed this cap. In that case, Meet servers transmit the audio and video streams of the participants deemed "most relevant." Relevance is determined from various characteristics, such as screen sharing and how recently a participant has spoken.
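To make the stream cap concrete, the following sketch ranks participants with a hypothetical relevance rule and keeps the top N. The rule puts screen sharers first, then the most recent speakers. Meet computes relevance on its servers, and this document doesn't describe its exact algorithm. The ranking rule, the `ParticipantActivity` type, and the cap value are assumptions for illustration only.

```typescript
// Hypothetical per-participant activity; not a Meet API type.
interface ParticipantActivity {
  id: string;
  isScreenSharing: boolean;
  lastSpokeAtMs: number | null; // null if they haven't spoken
}

// Illustrative ranking: screen sharers first, then the most recent
// speakers, then participants who haven't spoken at all.
function compareRelevance(a: ParticipantActivity, b: ParticipantActivity): number {
  if (a.isScreenSharing !== b.isScreenSharing) return a.isScreenSharing ? -1 : 1;
  if (a.lastSpokeAtMs === b.lastSpokeAtMs) return 0;
  if (a.lastSpokeAtMs === null) return 1;
  if (b.lastSpokeAtMs === null) return -1;
  return b.lastSpokeAtMs - a.lastSpokeAtMs; // more recent first
}

// Returns the IDs of at most `cap` participants whose streams would be sent.
function mostRelevant(people: ParticipantActivity[], cap: number): string[] {
  return [...people].sort(compareRelevance).slice(0, cap).map((p) => p.id);
}

const people: ParticipantActivity[] = [
  { id: "a", isScreenSharing: false, lastSpokeAtMs: 100 },
  { id: "b", isScreenSharing: true, lastSpokeAtMs: null },
  { id: "c", isScreenSharing: false, lastSpokeAtMs: 200 },
  { id: "d", isScreenSharing: false, lastSpokeAtMs: null },
];
const top = mostRelevant(people, 2); // ["b", "c"]
```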

- Selective Forwarding Unit (SFU)

A Selective Forwarding Unit (SFU) is a server-side component in WebRTC conferencing that manages media stream distribution. Participants connect only to the SFU, which selectively forwards relevant streams to other participants. This reduces client processing and bandwidth needs, enabling scalable conferences.

- Session Description Protocol (SDP)

The signaling mechanism WebRTC uses to negotiate a P2P connection. RFC 8866 governs it.

- SDP answer

The response to an SDP offer. The answer accepts or rejects the streams received from the remote peer. It also negotiates which streams it plans to transmit back to the offering peer. Note that the SDP answer can't add streams beyond those signaled in the initial offer. For example, if an offering peer signals that it accepts up to three audio streams from its remote peer, the remote peer can't signal four audio streams for transmission.
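The rule that an answer can't add streams comes from the offer/answer model in RFC 3264. An answer must contain exactly as many media descriptions (`m=` lines) as the offer, matched by position. It rejects a stream by setting that line's port to zero rather than removing it. The following TypeScript sketch checks only this structural rule on raw SDP strings. As an extra sanity check, it also treats a media-type mismatch at the same position as a violation. It isn't a full SDP validator.

```typescript
// Returns the media type of each m= line ("m=<media> <port> <proto> <fmt> ...").
function mediaKinds(sdp: string): string[] {
  return sdp
    .split(/\r?\n/)
    .filter((line) => line.startsWith("m="))
    .map((line) => line.slice(2).split(" ")[0]);
}

// True if the answer has one m= line per offered m= line, in the same
// order and with the same media type. Rejected streams keep their line
// (with port 0), so rejecting never changes the count; adding does.
function answerRespectsOffer(offer: string, answer: string): boolean {
  const offered = mediaKinds(offer);
  const answered = mediaKinds(answer);
  return (
    offered.length === answered.length &&
    offered.every((kind, i) => kind === answered[i])
  );
}

// Trimmed, illustrative SDP: only the m= lines matter to this check.
const offer = [
  "v=0",
  "m=audio 9 UDP/TLS/RTP/SAVPF 111",
  "m=audio 9 UDP/TLS/RTP/SAVPF 111",
  "m=audio 9 UDP/TLS/RTP/SAVPF 111",
].join("\r\n");
const tooMany = offer + "\r\nm=audio 9 UDP/TLS/RTP/SAVPF 111";
const rejectsOne = offer.replace("m=audio 9", "m=audio 0");

answerRespectsOffer(offer, tooMany); // false: a fourth audio stream was added
answerRespectsOffer(offer, rejectsOne); // true: one stream rejected with port 0
```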

- SDP offer

The initial SDP in the offer-answer peer-to-peer negotiation flow. The offer is created by the initiating peer and dictates the terms of the peer-to-peer session. The offer is always created by the Meet Media API client and submitted to Meet servers.

For example, an offer may indicate the number of audio or video streams the offerer is sending (or capable of receiving) and whether data channels are to be opened.
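To show how an offer signals stream counts and data channels, the following TypeScript sketch summarizes a raw SDP offer. It counts audio and video media descriptions, records each one's direction attribute, and detects a data channel (an `m=application` line using `webrtc-datachannel`). The sample offer is heavily trimmed and hypothetical. In particular, the number of `recvonly` audio and video sections is an illustrative choice, not a value taken from this document. For simplicity, the sketch ignores session-level direction attributes and falls back to the SDP default of `sendrecv`.

```typescript
interface OfferSummary {
  audio: number;
  video: number;
  dataChannel: boolean;
  directions: string[]; // one entry per audio or video section, in order
}

function summarizeOffer(sdp: string): OfferSummary {
  const result: OfferSummary = { audio: 0, video: 0, dataChannel: false, directions: [] };
  let current: string | null = null; // media type of the section being read
  for (const line of sdp.split(/\r?\n/)) {
    if (line.startsWith("m=")) {
      current = line.slice(2).split(" ")[0];
      if (current === "audio") result.audio++;
      if (current === "video") result.video++;
      if (current === "application" && line.includes("webrtc-datachannel")) {
        result.dataChannel = true;
      }
      if (current === "audio" || current === "video") {
        result.directions.push("sendrecv"); // default when no attribute is given
      }
    } else if (
      (current === "audio" || current === "video") &&
      /^a=(sendrecv|sendonly|recvonly|inactive)$/.test(line)
    ) {
      // Overwrite the default for the section we're currently inside.
      result.directions[result.directions.length - 1] = line.slice(2);
    }
  }
  return result;
}

const sampleOffer = [
  "v=0",
  "o=- 0 0 IN IP4 127.0.0.1",
  "s=-",
  "t=0 0",
  "m=audio 9 UDP/TLS/RTP/SAVPF 111",
  "a=recvonly",
  "m=audio 9 UDP/TLS/RTP/SAVPF 111",
  "a=recvonly",
  "m=video 9 UDP/TLS/RTP/SAVPF 96",
  "a=recvonly",
  "m=application 9 UDP/DTLS/SCTP webrtc-datachannel",
].join("\r\n");
const summary = summarizeOffer(sampleOffer);
// { audio: 2, video: 1, dataChannel: true, directions: ["recvonly", "recvonly", "recvonly"] }
```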

- Synchronization Source (SSRC)

An SSRC is a 32-bit identifier that uniquely identifies a single source of a media stream within an RTP (Real-time Transport Protocol) session. In WebRTC, SSRCs are used to distinguish between different media streams originating from different participants or even different tracks from the same participant (such as different cameras).
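Because the SSRC sits at a fixed offset in every RTP packet (bytes 8 through 11 of the fixed header defined in RFC 3550), a receiver can demultiplex packets into per-stream queues by reading that field. The following TypeScript sketch groups raw packets by SSRC. The function name and the choice of a `Map` keyed by SSRC are illustrative. With virtual media streams, a given SSRC isn't tied to one participant, so the CSRC, not the SSRC, identifies who the media came from.

```typescript
// Groups RTP packets by SSRC (bytes 8-11 of the RTP fixed header, big-endian).
// Packets too short to hold a fixed header are skipped.
function groupBySsrc(packets: Uint8Array[]): Map<number, Uint8Array[]> {
  const streams = new Map<number, Uint8Array[]>();
  for (const packet of packets) {
    if (packet.length < 12) continue; // not a valid RTP packet
    const view = new DataView(packet.buffer, packet.byteOffset, packet.byteLength);
    const ssrc = view.getUint32(8);
    const queue = streams.get(ssrc);
    if (queue) {
      queue.push(packet); // preserve arrival order within each stream
    } else {
      streams.set(ssrc, [packet]);
    }
  }
  return streams;
}
```

A jitter buffer or decoder per SSRC can then consume each queue independently.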

- RtpTransceiver

As detailed in RFC 8829, a transceiver is an abstraction around RTP streams in a peer-to-peer session. A single transceiver is mapped to, and described by, a single media description in the SDP. A transceiver consists of an `RtpSender` and an `RtpReceiver`.

Because RTP is bidirectional, each peer has its own transceiver instance for the same RTP connection. The `RtpSender` of a given transceiver for the local peer is mapped to the `RtpReceiver` of a specific transceiver in the remote peer. The inverse is also true: the `RtpSender` of the same transceiver of the remote peer is mapped to the `RtpReceiver` of the local peer.

Every media description has its own dedicated transceiver. Hence, a peer-to-peer session with multiple RTP streams has multiple transceivers, with multiple `RtpSender`s and `RtpReceiver`s for each peer.

- Virtual Media Streams

Virtual Media Streams are aggregated media streams generated by a Selective Forwarding Unit (SFU) in WebRTC conferences. Instead of each participant sending individual streams to everyone else, the SFU multiplexes selected participant streams onto fewer outgoing virtual streams. This simplifies the connection topology and reduces the load on participants, enabling scalable conferences. Each virtual stream can contain media from multiple participants, dynamically managed by the SFU.
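The SFU can switch which participant a virtual stream carries, so a client that attributes media to people typically tracks the latest CSRC seen on each virtual stream. The following TypeScript sketch does that bookkeeping from already-parsed header fields and reports when a stream switches sources. It assumes that the SSRC identifies the virtual stream and that each packet names its source in a single CSRC. The class and callback names are hypothetical. Mapping a CSRC to a participant identity is assumed to happen elsewhere, for example from the conference's participant metadata.

```typescript
// Called when a virtual stream starts carrying a different source.
type SwitchHandler = (ssrc: number, from: number | null, to: number) => void;

// Tracks which contributing source (CSRC) each virtual stream (SSRC)
// is currently carrying.
class VirtualStreamTracker {
  private current = new Map<number, number>(); // SSRC -> latest CSRC
  private onSwitch: SwitchHandler;

  constructor(onSwitch: SwitchHandler) {
    this.onSwitch = onSwitch;
  }

  // Call once per received packet with its SSRC and CSRC.
  observe(ssrc: number, csrc: number): void {
    const previous = this.current.get(ssrc);
    if (previous !== csrc) {
      this.current.set(ssrc, csrc);
      this.onSwitch(ssrc, previous ?? null, csrc);
    }
  }

  // Returns the CSRC most recently seen on a virtual stream, if any.
  sourceOf(ssrc: number): number | undefined {
    return this.current.get(ssrc);
  }
}
```

Reporting only the switches, rather than every packet, keeps downstream work such as relabeling a speaker tile proportional to how often the SFU reassigns streams.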

Related topics

To learn how to begin developing a Meet Media API client, follow the steps in Get started.

To learn how to set up and run a sample Meet Media API reference client, read the C++ reference client quickstart.

To get a conceptual overview, see Meet Media API concepts.

To learn more about WebRTC, see WebRTC For The Curious.

To learn about developing with Google Workspace APIs, including handling authentication and authorization, refer to Develop on Google Workspace.