Case study: Zyl shrinks their app by 50% using ML Kit

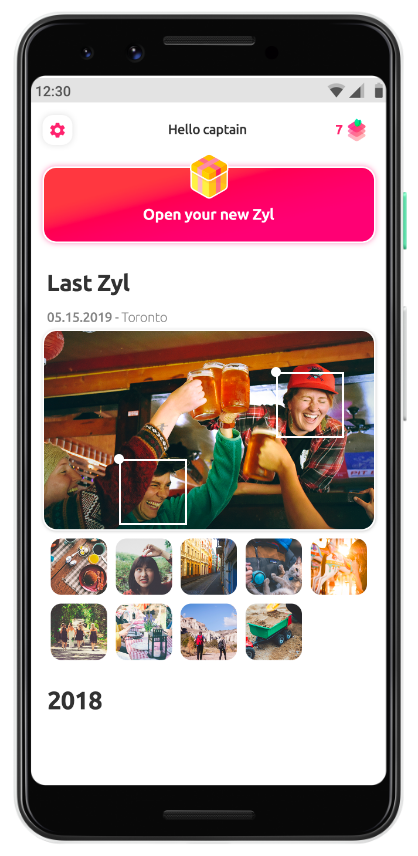

Every day, Zyl gives users a chance to rediscover one special memory and share it with their loved ones. Using image analysis, the app identifies meaningful photos, videos, and GIFs in each user’s library and resurfaces them one at a time.

Initially, Zyl built their own machine learning models to identify the images that would spark the most meaningful memories for their users. But since their models run directly on the user’s device, they’re also limited by common smartphone issues like battery life and huge media rolls.

So the team built a new model to extract faces and objects from the user’s media library and tried to label them in the most size- and energy-efficient way possible. But they quickly found themselves with a 200 MB model that slowed down the app and broke the user-first experience.

What they did

Zyl implemented ML Kit’s face detection and image labeling APIs, which provided just the right amount of heavy lifting to do the job without slowing down the app. “It was perfect for our needs,” said Aurelien Sibril, CTO at Zyl. “It runs fast, has a small memory footprint, and runs on-device with very good output accuracy.” Plus, by outsourcing this problem to ML Kit, the team at Zyl could spend more time on smaller machine learning models more specific to their industry and business model.

The integration was fast and easy: within just a few weeks it was in production. “Using our own model required lots of integration testing in order to make sure the mobile team and the data sciences team understood each other’s needs. Using ML Kit instead saved us weeks of integration,” said Sibril.

Results

Replacing Zyl’s bulky object detection model with the ML Kit APIs had an immediate effect on app performance, which in turn increased user satisfaction. Right away, their app size shrank by 50%.

The ML Kit face detection API also runs inference 85 times faster than their original model, freeing the team from a lot of extra processing. Now they can focus on their core product again, without worrying about maintaining standard deep-learning functionality.