High-level overview of connected services for Attribution Reporting, aimed at technical decision makers.

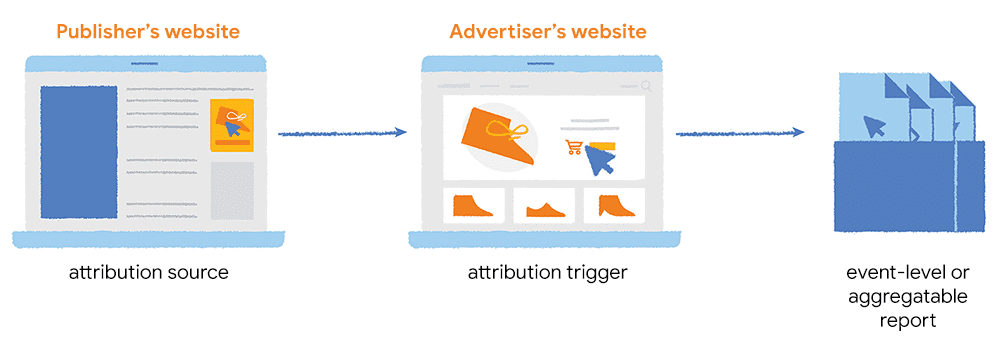

The Attribution Reporting API allows ad techs and advertisers to measure when an ad click or view leads to a conversion, such as a purchase. This API relies on a combination of client-side and server-side integrations, depending on your business needs.

Before continuing, make sure to read the Attribution Reporting overview. This will help you understand the API's purpose and the flow of the different output reports (event-level reports and summary reports). If you come across unfamiliar terms, refer to the Privacy Sandbox glossary.

Who is this article for?

You should read this article if:

- You're a technical decision-maker at an ad tech or advertiser. You may work in operations, DevOps, data science, IT, marketing, or another role where you make technical implementation decisions, and you're wondering how the API works for privacy-preserving measurement.

- You're a technical practitioner (such as a developer, system operator, system architect, or data scientist) who will be setting up experiments with this API and the Aggregation Service environment.

In this article, you'll read a high-level, end-to-end explanation of how the services work for the Attribution Reporting API. If you're a technical practitioner, you can experiment with this API locally.

Overview

The Attribution Reporting API consists of many services, which require specific setup, client-side configurations, and server deployments. To determine what you need, first:

- Make design decisions. Define what information you want to collect, identify what conversions you expect from any given campaign, and determine which report type to collect. The final output is one or both of the two report types: event-level reports and summary reports.

There are always two (and sometimes three) components that work together to support reporting:

- Website to browser communication. In cookie-based systems, information for conversions and ad engagements is attached to an identifier that allows you or an analytics service to join these events later. With this API, the browser associates conversions with ad clicks or views, based on your instructions, before the data is delivered for analysis. Therefore, your ad rendering code and conversion tracking must:

  - Tell the browser which conversions should be attributed to which ad clicks or impressions.
  - Signal any other data to include in the final reports.

- Data collection. You'll need a collector endpoint to receive the reports generated in users' browsers. Browsers can output two types of reports: event-level reports and aggregatable reports (which are encrypted and used to generate summary reports).

If you collect aggregatable reports, you'll need a third component:

- Summary report generation. Batch the aggregatable reports and use the Aggregation Service to process them into a summary report.

Design decisions

A key principle of Attribution Reporting is making design decisions early. You decide what data to collect, in which categories, and how frequently to process it. The output reports provide insights into your campaigns or business.

The output report can be one of two types:

- Event-level reports associate a particular ad click or view (on the ad side) with data on the conversion side. To preserve user privacy by limiting the joining of user identity across sites, conversion-side data is very limited, and the data is noisy (meaning that for a small percentage of cases, random data is sent instead of real reports).

- Summary reports are not tied to a specific event on the ad side. These reports offer more detailed conversion data and flexibility for joining click and view data with conversion data.
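The noise described for event-level reports can be pictured as a form of randomized response. The following is a simplified sketch and not the browser's actual algorithm. `noiseProbability` and `triggerDataCardinality` are hypothetical parameters, and the random source is injectable so the behavior can be tested.

```javascript
// Simplified randomized-response sketch of event-level noise.
// ASSUMPTION: noiseProbability and triggerDataCardinality are illustrative
// values, not the browser's real configuration.
function maybeNoiseTriggerData(realTriggerData, {
  noiseProbability = 0.01,
  triggerDataCardinality = 8,
  random = Math.random,
} = {}) {
  if (random() < noiseProbability) {
    // Replace the real conversion-side value with a uniformly random one.
    return Math.floor(random() * triggerDataCardinality);
  }
  // Most of the time, the real value is reported unchanged.
  return realTriggerData;
}

console.log(maybeNoiseTriggerData(3, { random: () => 0.5 })); // 3
console.log(maybeNoiseTriggerData(3, { random: () => 0 }));   // 0
```

Because only a small, known fraction of reports is randomized, each individual report is deniable while aggregate counts stay useful.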

Your report selection determines what data you'll need to collect.

You can also think of the final output as an input for the tools you use to make decisions. For example, if you generate summary reports to determine how many conversions led to some total spend value, that may help your team decide what your next ad campaign should target to generate a higher total spend.

Once you've decided what you want to measure, you can set up the client side of the Attribution Reporting API.

Website to browser communication

Attribution event flow

Imagine a publisher site that displays ads. Each advertiser or ad-tech provider wants to learn about interactions with their ads, and attribute conversions to the correct ad. Reports (both event-level and aggregatable) would be generated as follows:

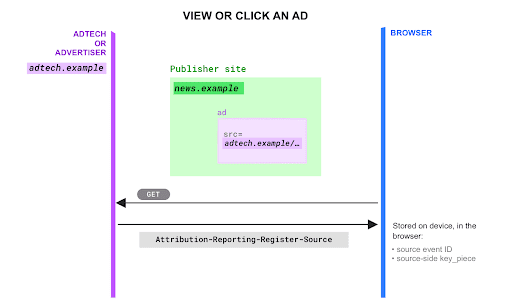

On the publisher site, an ad element (an `<a>` or `<img>` tag) is configured with a special attribute, `attributionsrc`. Its value is a URL, for example `https://adtech.example/register-source?ad_id=...`.

Here's an example of a link that registers a source when clicked:

    <a href="https://shoes.example/landing" attributionsrc="https://adtech.example/register-source?..." target="_blank">Click me</a>

Here's an example of an ad image that registers a source when viewed:

    <img src="https://adtech.example/ad.png" attributionsrc="https://adtech.example/register-source?..."/>

Alternatively, instead of HTML elements, JavaScript calls can be used.

Here's a JavaScript example using `window.open()`. Note that the URL is URL-encoded to avoid issues with special characters.

    const encodedUrl = encodeURIComponent(
      'https://adtech.example/attribution_source?ad_id=...');
    window.open(
      "https://shoes.example/landing",
      "_blank",
      `attributionsrc=${encodedUrl}`);

When the user clicks or views the ad, the browser sends a `GET` request to the `attributionsrc` URL, typically an advertiser or ad-tech provider endpoint. Upon receiving this request, the advertiser or ad-tech provider can instruct the browser to register a source event for the interaction with the ad, so that conversions can later be attributed to this ad. To do so, it includes a special HTTP header in its response. This header carries custom data about the source event (the ad click or view). If a conversion ends up taking place for this ad, this custom data will ultimately be surfaced in the attribution report.
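As a minimal sketch of this step, the handler below answers the `attributionsrc` request with the `Attribution-Reporting-Register-Source` response header. The header's value is a JSON object. The `ad_id` query parameter, the `https://shoes.example` destination, and the handler itself are hypothetical. Only the header name and the `source_event_id` and `destination` fields come from the API.

```javascript
// Hypothetical ad-tech handler for https://adtech.example/register-source?ad_id=...
// It accepts any (req, res) pair shaped like Node's http.IncomingMessage and
// http.ServerResponse, e.g. http.createServer(handleRegisterSource).

// Build the JSON value of the source-registration header.
function buildSourceRegistration(adId) {
  // source_event_id is a 64-bit unsigned integer encoded as a string.
  // This sketch only checks for digits and falls back to "0" otherwise.
  const sourceEventId = /^\d+$/.test(adId) ? adId : "0";
  return JSON.stringify({
    source_event_id: sourceEventId,
    // Advertiser site on which conversions for this ad are expected.
    destination: "https://shoes.example",
  });
}

function handleRegisterSource(req, res) {
  const url = new URL(req.url, "https://adtech.example");
  const adId = url.searchParams.get("ad_id") ?? "";
  res.setHeader(
    "Attribution-Reporting-Register-Source",
    buildSourceRegistration(adId),
  );
  res.statusCode = 200;
  res.end();
}
```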

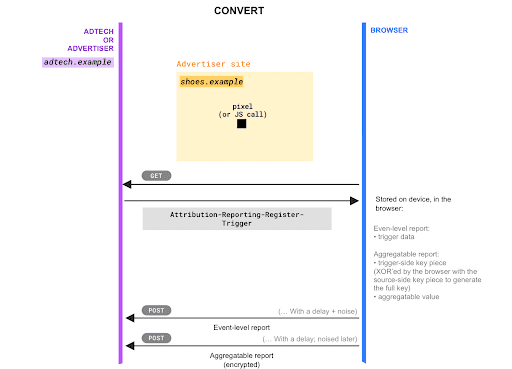

Later, the user visits the advertiser's site.

On each relevant page of the advertiser's site (for example, a purchase confirmation page or a product page), a conversion pixel (an `<img>` element) or JavaScript call makes a request to `https://adtech.example/conversion?param1=...&param2=...`. The service at this URL, typically the advertiser or ad-tech provider, receives the request. If it decides to categorize this as a conversion, it instructs the browser to record a conversion, that is, to trigger an attribution. To do so, the advertiser or ad-tech provider includes in its response to the pixel request a special HTTP header carrying custom data about the conversion.
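On the trigger side, the response to the conversion pixel carries the `Attribution-Reporting-Register-Trigger` header. The sketch below builds that header's JSON value. The `trigger_data` value, the `0x400` key piece, and the `campaignCounts` key name are hypothetical. The sketch also assumes that the source registration declared an aggregation key named `campaignCounts`.

```javascript
// Sketch: build the trigger-registration header returned in response to the
// conversion pixel. ASSUMPTIONS: the trigger_data value, the "0x400" key
// piece and the "campaignCounts" key name are hypothetical, and
// "campaignCounts" must match an aggregation key declared by the source.
function buildTriggerRegistration({ triggerData, purchaseValue }) {
  return {
    name: "Attribution-Reporting-Register-Trigger",
    value: JSON.stringify({
      // Event-level part: a small, coarse piece of conversion-side data
      // (encoded as a string), such as a conversion type.
      event_trigger_data: [{ trigger_data: String(triggerData) }],
      // Aggregatable part: a key piece ORed with the source's key piece to
      // form the histogram bucket...
      aggregatable_trigger_data: [
        { key_piece: "0x400", source_keys: ["campaignCounts"] },
      ],
      // ...and the value contributed to that bucket.
      aggregatable_values: { campaignCounts: purchaseValue },
    }),
  };
}
```

A pixel handler would then call `res.setHeader(header.name, header.value)` on its response. The event-level and aggregatable parts can be set in the same response, so one conversion can feed both report types.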

The browser, on the user's local device, receives this response and matches the conversion data with the original source event (ad click or view). Learn more in Match sources to triggers.

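The browser schedules a report to be sent to the reporting origin, that is, the ad-tech origin that registered the source and the trigger. This report includes:

- The custom attribution configuration data that the ad-tech provider or advertiser attached to the source event when registering it.
- The custom conversion data set in the response to the conversion pixel request.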
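To make the two halves of a report concrete, the sketch below splits an event-level report body into the data tied to the source event and the data set at conversion time. The field names `source_event_id`, `source_type`, `attribution_destination`, and `trigger_data` follow the event-level report format. The function name is hypothetical, and real reports carry additional fields.

```javascript
// Sketch: separate source-side and conversion-side data in an event-level
// report body. ASSUMPTION: only a subset of the report's fields is read.
function splitEventLevelReport(body) {
  const report = JSON.parse(body);
  return {
    // Values tied to the source event (click or view) and its registration.
    sourceSide: {
      sourceEventId: report.source_event_id,
      sourceType: report.source_type,
      destination: report.attribution_destination,
    },
    // Value set by the ad tech when the trigger (conversion) was registered.
    conversionSide: {
      triggerData: report.trigger_data,
    },
  };
}
```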

Later, the browser sends the reports to the reporting origin with some delay and, for event-level reports, noise. Aggregatable report payloads are encrypted, while event-level reports are not.

Attribution triggers (advertiser's website)

The attribution trigger is the event that tells the browser to capture conversions.

We recommend capturing the conversions that are most important to the advertiser, such as purchases. This ensures the aggregate results are detailed and accurate for these events. Multiple conversion types and metadata can be captured in summary reports.

Match sources to triggers

When a browser receives an attribution trigger response, it looks in local storage for a source whose reporting origin matches the attribution trigger's origin and whose destination matches the eTLD+1 of the page where the trigger fired.

For example, when the browser receives an attribution trigger from adtech.example on shoes.example/shoes123, it looks for a source in local storage that matches both adtech.example and shoes.example.
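The matching rule can be sketched as a filter over stored sources. The `reportingOrigin` and `destination` property names are hypothetical. The `siteOf()` helper is a deliberate simplification: it treats the last two hostname labels as the eTLD+1, whereas browsers consult the Public Suffix List.

```javascript
// Sketch: find stored sources that match an incoming trigger.
// ASSUMPTION: siteOf() naively treats the last two hostname labels as the
// eTLD+1; browsers consult the Public Suffix List instead (e.g. for co.uk).
function siteOf(url) {
  const labels = new URL(url).hostname.split(".");
  return labels.slice(-2).join(".");
}

// Keep sources registered by the same ad tech (reporting origin) whose
// destination site matches the site of the page where the trigger fired.
function matchingSources(storedSources, triggerOrigin, pageUrl) {
  const pageSite = siteOf(pageUrl);
  return storedSources.filter(
    (s) => s.reportingOrigin === triggerOrigin && siteOf(s.destination) === pageSite,
  );
}
```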

Filters (or custom rules) can be set to determine when a trigger is matched to a specific source. For example, set a filter to count only conversions for a specific product category and ignore all other categories. Filters and prioritization models allow for more advanced attribution reporting.
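A simplified sketch of filter matching follows. It compares a source's `filter_data` with a trigger's `filters`. For each key present on both sides, at least one value must be shared. Keys present on only one side are ignored. The `product_category` key is hypothetical. This sketch leaves out edge cases of the real API, such as empty lists, `not_filters`, and reserved keys.

```javascript
// Simplified sketch of matching a source's filter_data against a trigger's
// filters. ASSUMPTION: empty lists, not_filters and reserved keys are ignored.
function filtersMatch(sourceFilterData, triggerFilters) {
  for (const [key, triggerValues] of Object.entries(triggerFilters)) {
    if (!Object.prototype.hasOwnProperty.call(sourceFilterData, key)) {
      continue; // key absent on the source: not used for matching
    }
    const sourceValues = sourceFilterData[key];
    if (!triggerValues.some((v) => sourceValues.includes(v))) return false;
  }
  return true;
}

// A source registered for a shoes campaign only matches "shoes" conversions.
const shoesSource = { product_category: ["shoes"] };
console.log(filtersMatch(shoesSource, { product_category: ["shoes"] })); // true
console.log(filtersMatch(shoesSource, { product_category: ["hats"] }));  // false
```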

If multiple matching attribution sources are found in local storage, the browser selects the one with the highest priority. When no priorities are set, or several sources share the highest priority, it picks the one that was stored most recently.
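The selection rule can be sketched as a single pass over the matching sources. Missing priorities are treated as 0. The `registeredAt` timestamp is a hypothetical property standing in for when the browser stored each source.

```javascript
// Sketch: choose one source among several matches. Highest priority wins;
// ties go to the most recently registered source.
// ASSUMPTIONS: missing priorities count as 0, and registeredAt is a
// hypothetical numeric timestamp recorded when the source was stored.
function selectSource(candidates) {
  let best = null;
  for (const source of candidates) {
    if (best === null) {
      best = source;
      continue;
    }
    const p = source.priority ?? 0;
    const bestP = best.priority ?? 0;
    if (p > bestP || (p === bestP && source.registeredAt > best.registeredAt)) {
      best = source;
    }
  }
  return best; // null when there are no candidates
}
```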

Data collection

The browser sends each attribution trigger, together with its matched source, as a report to a reporting endpoint on an ad tech-owned server (sometimes referred to as a collection endpoint or collection service). These reports can be event-level reports or aggregatable reports.
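A collector can be as small as an HTTP handler for the two well-known paths to which browsers `POST` reports on the reporting origin. In this sketch, the function names and the in-memory `store` are assumptions. A production collector would persist reports durably and must be served over HTTPS.

```javascript
// Well-known report paths on the reporting origin, mapped to a report kind.
const REPORT_PATHS = {
  "/.well-known/attribution-reporting/report-event-attribution": "event",
  "/.well-known/attribution-reporting/report-aggregate-attribution": "aggregatable",
};

// Hypothetical in-memory store; replace with durable storage in practice.
const store = { event: [], aggregatable: [] };

// Returns "event", "aggregatable", or null for requests that aren't reports.
function reportKind(method, path) {
  if (method !== "POST") return null;
  return REPORT_PATHS[new URL(path, "https://adtech.example").pathname] ?? null;
}

// Handler shaped for Node's http.createServer(handleReport).
function handleReport(req, res) {
  const kind = reportKind(req.method, req.url);
  if (kind === null) {
    res.statusCode = 404;
    res.end();
    return;
  }
  let body = "";
  req.on("data", (chunk) => { body += chunk; });
  req.on("end", () => {
    try {
      store[kind].push(JSON.parse(body));
      res.statusCode = 200;
    } catch {
      res.statusCode = 400; // malformed JSON body
    }
    res.end();
  });
}
```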

Aggregatable reports are used to generate summary reports. An aggregatable report is a combination of data gathered from the ad (on a publisher's site) and conversion data (from the advertiser's site), generated and encrypted by the browser on a user's device before it's collected by the ad tech.

Event-level reports are delayed by 2 to 30 days. Aggregatable reports are sent with a random delay of up to one hour, and their contributions must fit within the contribution budget. These choices protect privacy and prevent exploitation of any individual user's actions.
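The contribution budget caps the total aggregatable value that a single source can contribute across all of its reports. In Chrome's implementation, that cap is 65,536 (2^16). The browser enforces it; the hypothetical `fitsBudget()` function below only illustrates the arithmetic.

```javascript
// Sketch of the per-source contribution budget check for aggregatable values.
// ASSUMPTION: 65,536 is the documented per-source L1 budget.
const CONTRIBUTION_BUDGET = 65536;

// True if adding newValues to what the source already contributed stays
// within the budget.
function fitsBudget(alreadyContributed, newValues) {
  const total = newValues.reduce((sum, v) => sum + v, alreadyContributed);
  return total <= CONTRIBUTION_BUDGET;
}

console.log(fitsBudget(0, [32768, 32768])); // true
console.log(fitsBudget(60000, [10000]));    // false
```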

If you're only interested in event-level reports, this is the last piece of infrastructure you need. However, if you want to generate summary reports, you'll need to process the aggregatable reports with an additional service.

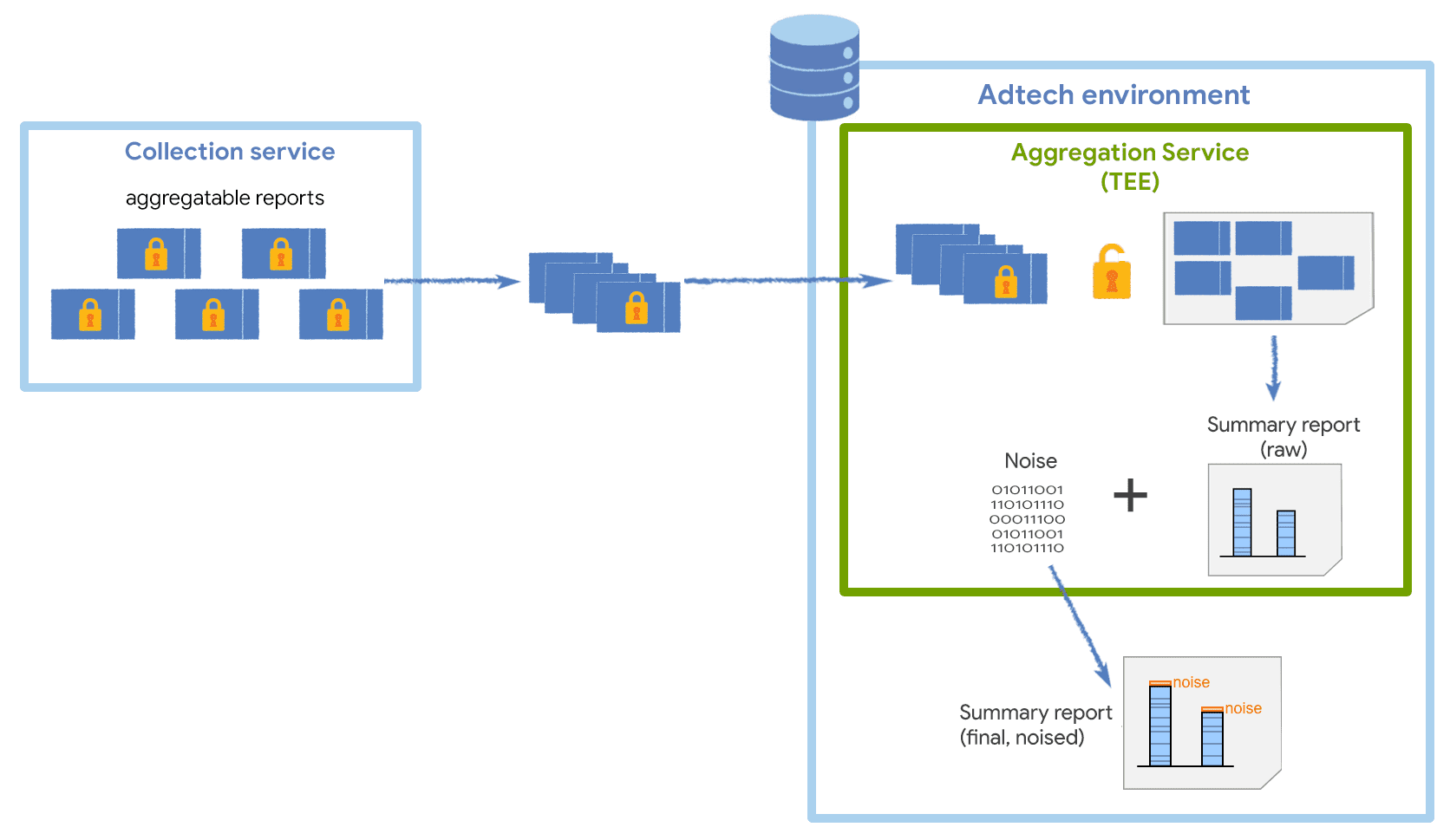

Summary report generation

To generate summary reports, you'll use the Aggregation Service (operated by the ad tech) to process the aggregatable reports. The Aggregation Service adds noise to protect user privacy and returns the final summary report.

After the collected aggregatable reports are batched, the batch is processed by the Aggregation Service. A coordinator gives the decryption keys only to attested versions of the Aggregation Service. The Aggregation Service then decrypts the data, aggregates it, and adds noise before returning the results as a summary report.
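The "aggregate, then add noise" step can be sketched on contributions that are assumed to be already decrypted; no decryption is shown. The sketch uses continuous Laplace noise with scale L1 / epsilon as a stand-in. The real Aggregation Service uses its own noise mechanism and parameters. Bucket keys are plain strings here, and the random source is injectable for testing.

```javascript
// Simplified sketch of aggregating decrypted contributions and adding noise.
// ASSUMPTIONS: continuous Laplace noise with scale L1 / epsilon, string
// bucket keys, and an injectable random source for deterministic tests.
const L1_SENSITIVITY = 65536;

// Draw Laplace(0, scale) noise by inverting the CDF of a uniform sample.
function laplaceNoise(scale, random = Math.random) {
  const u = random() - 0.5; // uniform in [-0.5, 0.5)
  // Guard against log(0) when u is exactly -0.5.
  const tail = Math.max(1 - 2 * Math.abs(u), Number.MIN_VALUE);
  return -scale * Math.sign(u) * Math.log(tail);
}

// Sum contributions per bucket, then add independent noise to each sum.
function aggregateWithNoise(contributions, epsilon, random = Math.random) {
  const sums = new Map();
  for (const { bucket, value } of contributions) {
    sums.set(bucket, (sums.get(bucket) ?? 0) + value);
  }
  const scale = L1_SENSITIVITY / epsilon;
  const summary = {};
  for (const [bucket, sum] of sums) {
    summary[bucket] = sum + laplaceNoise(scale, random);
  }
  return summary;
}
```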

Batched aggregatable reports

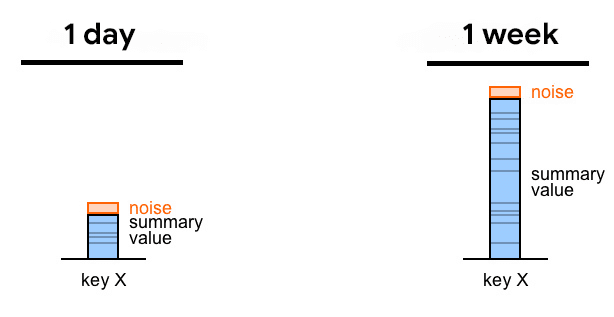

Before the aggregatable reports are processed, they must be batched. A batch consists of strategically grouped aggregatable reports. Your strategy will most likely reflect a specific time period (such as daily or weekly). Batching can take place on the same server that acts as your reporting endpoint.
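A daily batching strategy can be sketched by grouping reports on their scheduled report time. Each aggregatable report carries a `shared_info` JSON string whose `scheduled_report_time` field holds seconds since the epoch. Grouping by UTC calendar day is an assumed strategy, and `batchByDay()` is a hypothetical helper.

```javascript
// Sketch: group collected aggregatable reports into daily batches.
// ASSUMPTION: UTC calendar days are the batching strategy; any other
// grouping key could be computed the same way.
function batchByDay(reports) {
  const batches = new Map();
  for (const report of reports) {
    const sharedInfo = JSON.parse(report.shared_info);
    const seconds = Number(sharedInfo.scheduled_report_time);
    // ISO date prefix, e.g. "1970-01-01".
    const day = new Date(seconds * 1000).toISOString().slice(0, 10);
    if (!batches.has(day)) batches.set(day, []);
    batches.get(day).push(report);
  }
  return batches;
}
```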

Batches should contain many reports to ensure the signal-to-noise ratio is high.
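To see why larger batches help, consider a worked example under a simplifying assumption: each summary value receives Laplace noise with scale $b = L_1/\varepsilon$, regardless of how many reports went into it. Here $L_1 = 65{,}536$ is the contribution budget and $\varepsilon = 10$ is a hypothetical privacy parameter. Because the expected absolute value of Laplace noise equals $b$, the relative error for a true sum $S$ is about $b/S$:

$$
b = \frac{L_1}{\varepsilon} = \frac{65{,}536}{10} = 6{,}553.6,
\qquad
\frac{\mathbb{E}\,|\text{noise}|}{S} = \frac{b}{S} =
\begin{cases}
\dfrac{6{,}553.6}{65{,}536} = 10\% & \text{if } S = 65{,}536,\\[6pt]
\dfrac{6{,}553.6}{655{,}360} = 1\% & \text{if } S = 655{,}360.
\end{cases}
$$

Under this assumption, buckets that aggregate ten times as much contribution value have roughly ten times the signal-to-noise ratio.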

Batch periods can change at any time to ensure you capture specific events where you expect higher volume, such as for an annual sale. The batching period can be changed without needing to change attribution sources or triggers.

Aggregation Service

The Aggregation Service is responsible for processing aggregatable reports to generate a summary report. Aggregatable reports are encrypted and can only be read by the Aggregation Service, which runs on a trusted execution environment (TEE).

The Aggregation Service requests decryption keys from the coordinator to decrypt and aggregate the data. Once decrypted and aggregated, the results are noised to preserve privacy and returned as a summary report.

Practitioners can generate cleartext aggregatable reports to test the Aggregation Service locally. Alternatively, you can test with encrypted reports on AWS with Nitro Enclaves.

What's next?

We want to engage in conversations with you to ensure we build an API that works for everyone.

Discuss the API

Like other Privacy Sandbox APIs, this API is documented and discussed publicly.

Experiment with the API

You can experiment with the Attribution Reporting API and participate in conversations about it.