As you read through the Privacy Sandbox on Android documentation, use the Developer Preview or Beta button to select the program version that you're working with, as instructions may vary.

The Attribution Reporting API is designed to support key use cases for attribution and conversion measurement across apps and the web without reliance on cross-party user identifiers. Because the API differs from common designs today, implementers should factor in some important high-level considerations:

- Event-level reports include low-fidelity conversion data. A small number of conversion values work well.

- Aggregatable reports include higher-fidelity conversion data. Your solutions should design aggregation keys based on your business requirements and the 128-bit limit.

- Your solution's data models and processing should factor in rate limits for available triggers, time delays for sending trigger events, and noise applied by the API.

To help you with integration planning, this guide provides a comprehensive view, which may include features that are not yet implemented at the current stage of the Privacy Sandbox on Android Developer Preview. In these cases, timeline guidance is provided.

On this page, we use source to represent either a click or a view, and trigger to represent a conversion.

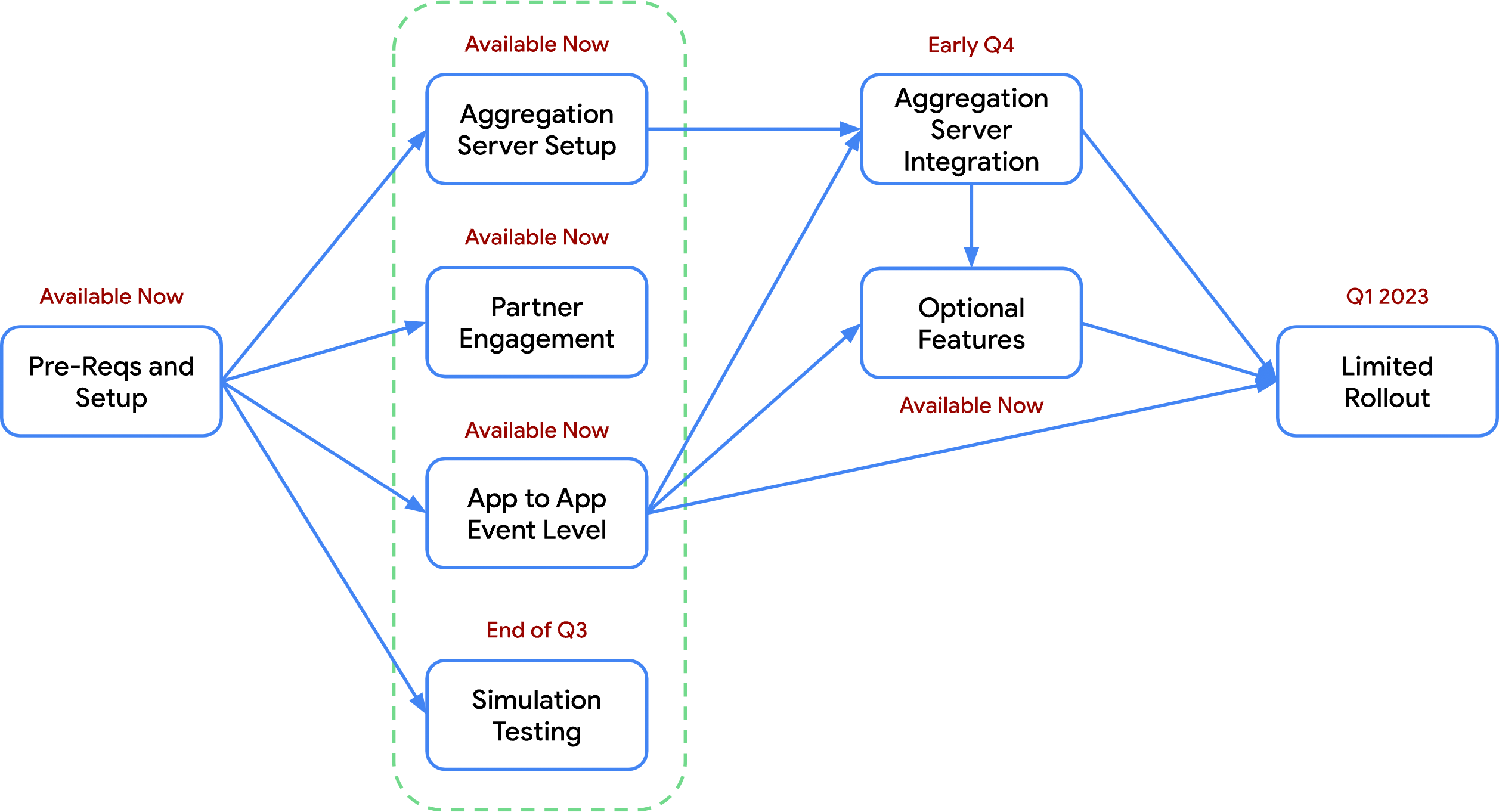

The chart below displays the different workflow options for attribution integration. Sections listed in the same column (circled in green) can be worked on in parallel; for example, partner engagement can be done at the same time as app-to-app event-level attribution.

Figure 1. The attribution integration workflow.

Prerequisites and setup

Complete the steps in this section to improve your understanding of the Attribution Reporting API. These steps will set you up to gather meaningful results when using the API in the ad tech ecosystem.

Familiarize yourself with the API

- Read the design proposal to familiarize yourself with the Attribution Reporting API and its capabilities.

- Read the developer guide to learn how to incorporate the code and API calls that you will need for your use cases.

- Sign up to receive updates on the Attribution Reporting API. This will help you stay current on new features that are introduced in future releases.

Set up and test the sample app

- Once you are ready to begin your integration, get yourself set up with the latest Developer Preview in Android Studio.

- Set up mock server endpoints for event registrations and report deliveries. We have provided mocks that you can use in tandem with tools available online.

- Download and run the code in our sample app to familiarize yourself with registering sources and triggers.

- Set the time window for sending reports. The API supports windows of 2 days, 7 days, or a custom period between 2 and 30 days.

- Once you have registered sources and triggers by running the sample app, and the set time period has passed, verify that you have received an event-level report and an encrypted aggregatable report. If you need to debug reports, you can generate them more quickly by force-running reporting jobs.

- Review the results for app-to-app attribution. Confirm that the data in these results is as expected for both last-touch and post-install cases.

- After you have a feel for how the client API and server work together, use the sample app as an example to guide your own integration. Set up your own production server and add event registration calls to your apps.

Pre-integration

Enroll your organization with the Privacy Sandbox on Android. This enrollment is designed to prevent unnecessary duplication of ad tech platforms, which would allow access to more information than necessary on the user's activities.

Partner engagement

Ad tech partners (MMP/SSP/DSP) often create integrated attribution solutions. The steps in this section help you prepare for success in engaging with your ad tech partners.

- Schedule a discussion with your top measurement partners to discuss testing and adoption of the Attribution Reporting API. Measurement partners can include ad tech networks, SSPs, DSPs, advertisers, or any other partner that you currently work with or would like to work with.

- Collaborate with your measurement partners to define timelines for integration, from initial testing to adoption.

- Clarify with your measurement partners which areas each of you will cover in attribution design.

- Establish channels of communication between measurement partners to sync on timelines and end-to-end testing.

- Design high-level data flows across measurement partners. Key considerations include the following:

- How will measurement partners register attribution sources with the Attribution Reporting API?

- How will ad tech networks register triggers with the Attribution Reporting API?

- How will each ad tech validate API requests and return responses to complete source and trigger registrations?

- Are there any reports that need to be shared across partners outside of the Attribution Reporting API?

- Are there any other integration points or alignment needed across partners? For example, do you and your partners need to work on deduplicating conversions, or align on aggregation keys?

- If app-to-web attribution is applicable, schedule a discussion with measurement partners on web to discuss design, testing, and adoption of the Attribution Reporting API. Refer to the questions in the previous step as you begin conversations with web partners.

Prototype app-to-app event-level attribution

This section helps you set up a basic app-to-app attribution with event-level reports in your app or SDK. Completion of this section is required before you can begin prototyping aggregation server attribution.

- Set up a collection server for event records. You can do this by using the provided spec to generate a mock server, or set up your own server with the sample server code.

- Add register source event calls to your SDK or app when ads are shown.

- Critical considerations include the following:

- Ensure that source event IDs are available and passed correctly to the source registration API calls.

- Make sure you can also pass in an `InputEvent` to register click sources.

- Determine how you will configure source priority for different types of events. For example, assign a high priority to events that are considered high value, such as clicks over views.

- The default value for expiry is OK for testing. Alternatively, different expiration windows can be configured.

- Filters and attribution windows can be left as defaults for testing.

- Optional considerations include the following:

- Design aggregation keys if you are ready for them.

- Consider your redirect strategy when you establish how you want to work with other measurement partners.

- Add register trigger events to your SDK or app to record conversion events.

- Critical considerations include the following:

- Define trigger data, considering the limited fidelity returned: How are you going to reduce the number of conversion types your advertisers need for the 3 bits available for clicks, and the 1 bit available for views?

- Limits on available triggers in event reports: How do you plan to reduce the number of total conversions per source you can receive in event reports?

- Optional considerations include the following:

- Skip creating deduplication keys until you are doing accuracy tests.

- Skip creating aggregation keys and values until simulation testing support is ready.

- Skip redirects until you establish how you want to work with other measurement partners.

- Trigger priority is not essential for testing.

- Filters can likely be ignored for initial testing.

- Test that source events are being generated for ads, and that triggers are leading to the creation of event reports.
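One way to approach the trigger data question above is to rank conversion types by value and fold the long tail into a catch-all bucket. The following is a minimal sketch of that idea, not part of the API: the conversion type names, the `build_trigger_data_map` helper, and the catch-all strategy are all hypothetical. Only the bit widths (3 bits for clicks, 1 bit for views) come from this guide.

```python
# Bit widths taken from this guide: 3 bits for clicks, 1 bit for views.
TRIGGER_DATA_BITS = {"click": 3, "view": 1}

# Illustrative conversion types, ordered from most to least valuable.
# These names are hypothetical and stand in for an advertiser's own events.
CONVERSION_TYPES = [
    "purchase", "subscription", "add_to_cart", "signup", "level_complete",
    "tutorial_complete", "app_open", "search", "share", "rate_app",
]


def build_trigger_data_map(conversion_types, source_type):
    """Assign each conversion type a trigger data value that fits the bit width.

    The most valuable types get their own value; every type that does not
    fit shares the last available value as a catch-all bucket.
    """
    capacity = 2 ** TRIGGER_DATA_BITS[source_type]  # 8 for clicks, 2 for views
    mapping = {}
    for index, name in enumerate(conversion_types):
        mapping[name] = min(index, capacity - 1)
    return mapping


click_map = build_trigger_data_map(CONVERSION_TYPES, "click")
view_map = build_trigger_data_map(CONVERSION_TYPES, "view")
```

With this layout, view-through conversions can only distinguish the top conversion type from everything else, which makes the fidelity trade-off concrete when you discuss it with advertisers.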

Simulation testing

This section will walk you through testing the impact that moving your current conversions to event and aggregatable reports is likely to have on reporting and optimization systems. This will allow you to start impact testing before finishing your integration.

Testing is done by simulating the generation of event and aggregatable reports based on historical conversion records you have, and then getting the aggregated results from a simulated aggregation server. These results can be compared with historical conversion numbers to see how reporting accuracy would change.

Optimization models, such as predicted conversion rate calculations, can be trained on these reports so that you can compare their accuracy with that of models built on current data. This is also a chance to experiment with different aggregation key structures and their impact on results.
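The comparison with historical conversion numbers can be as simple as a per-key relative error. The sketch below assumes you have already reduced both data sets to totals per campaign; the `relative_error` helper and the numbers are made up for illustration and do not come from the Measurement Simulation Library.

```python
def relative_error(historical, reported):
    """Return |reported - historical| / historical for each key.

    Keys with a zero historical total are skipped, because the relative
    error is undefined for them.
    """
    errors = {}
    for key, truth in historical.items():
        if truth == 0:
            continue
        errors[key] = abs(reported.get(key, 0) - truth) / truth
    return errors


# Hypothetical totals: historical records vs. simulated summary reports.
historical_conversions = {"campaign_a": 1000, "campaign_b": 40}
summary_report_totals = {"campaign_a": 1012, "campaign_b": 31}

errors = relative_error(historical_conversions, summary_report_totals)
for campaign, error in sorted(errors.items()):
    print(f"{campaign}: {error:.1%} relative error")
```

In this made-up data, the small campaign shows a much larger relative error than the large one, which is the pattern to watch for when noise is added to small aggregates.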

- Set up the Measurement Simulation Library on a local machine.

- Read the spec on how your conversion data must be formatted to be compatible with the simulated report generator.

- Design your aggregation keys based on business requirements.

- Critical considerations include the following:

- Consider the critical dimensions your clients or partners need to aggregate and focus your evaluation on those.

- Determine the minimum number of aggregate dimensions and cardinalities needed for your requirements.

- Ensure that source- and trigger-side key pieces don't exceed 128 bits.

- If your solutions involve contributing to multiple values per trigger event, be sure to scale the values against the maximum contribution budget, L1. This will help minimize the impact of noise.

- Here is an example that details setting a key to collect aggregate conversion counts at a campaign level, and a key to collect aggregate purchase values at a geo level.

- Run the report generator to create event and aggregatable reports.

- Run the aggregatable reports through the simulated aggregation servers to get summary reports.

- Perform utility experiments:

- Compare conversion totals from event-level and summary reports with historical conversion data to determine conversion reporting accuracy. For best results, run the reporting tests and comparisons on a broad, representative portion of the advertiser base.

- Retrain your models based on event-level report data, and potentially summary report data. Compare accuracy with models built on historical training data.

- Try different batching strategies and see how they impact your results.

- Critical considerations include the following:

- Timeliness of summary reports for adjusting bids.

- Average frequencies of attributable events on the device. For example, lapsed users coming back based on historical purchase events data.

- Noise level. More batches means smaller aggregation, and smaller aggregation means more noise is applied.
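The advice above about scaling values against the contribution budget can be sketched as follows. This is an illustration under stated assumptions: the budget value of 65536 is assumed here and should be confirmed against the current API documentation, and the equal split of the budget across keys, the key names, and both helper functions are hypothetical.

```python
L1_BUDGET = 65536  # Assumed value (2**16); confirm against the current API docs.


def scale_contributions(raw_values, max_expected, budget=L1_BUDGET):
    """Scale raw per-key values so one trigger's contributions fit the budget.

    raw_values:   key name -> raw value for this trigger
    max_expected: key name -> largest raw value you expect for that key
    Each key gets an equal share of the budget (a simplifying assumption).
    """
    share = budget // len(max_expected)
    scaled = {}
    for key, value in raw_values.items():
        capped = min(value, max_expected[key])  # clip outliers to the cap
        scaled[key] = capped * share // max_expected[key]
    return scaled


def unscale(key, aggregated_value, max_expected, budget=L1_BUDGET):
    """Convert an aggregated, scaled value back to the original units."""
    share = budget // len(max_expected)
    return aggregated_value * max_expected[key] / share


# Hypothetical keys: a purchase count and a purchase value capped at 1000.
max_expected = {"purchase_count": 1, "purchase_value": 1000}
scaled = scale_contributions(
    {"purchase_count": 1, "purchase_value": 250}, max_expected
)
```

Scaling up before aggregation and scaling down afterwards means the noise, which does not grow with your values, is small relative to the signal once you convert back to the original units.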

Prototype aggregation server attribution: Setup

These steps will ensure you are able to receive aggregatable reports of your source and trigger events.

- Set up your aggregation server:

- Set up your AWS account.

- Enroll in the aggregation service with your coordinator.

- Set up your aggregation server on AWS from provided binaries.

- Design your aggregation keys based on business requirements. If you have already completed this task in the app-to-app event-level section, you may skip this step.

- Set up a collection server for aggregatable reports. If you have already created one in the app-to-app event-level section, you may reuse it.

Prototype aggregation server attribution: Integration

To proceed past this point, you must have completed the Prototype aggregation server attribution: Setup section, or the Prototype app-to-app event-level attribution section.

- Add aggregation key data to your source and trigger events. This will likely require passing more data about the ad event, such as the campaign ID, into your SDK or app to include in the aggregation key.

- Collect app-to-app aggregatable reports from the source and trigger events that you registered with aggregation key data.

- Test different batching strategies as you run these aggregatable reports through the aggregation server, and see how they impact your results.
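To make the first step above more concrete, here is a minimal sketch of composing an aggregation key from a source-side piece and a trigger-side piece. It assumes the two pieces are combined with a bitwise OR and written as hex strings; the bit layout (campaign ID in the upper 64 bits, conversion category in the lower 64 bits) and all function names are hypothetical choices for illustration. Only the 128-bit limit comes from this guide.

```python
KEY_BITS = 128     # Key size limit stated in this guide.
SOURCE_SHIFT = 64  # Hypothetical layout: source-side data in the upper 64 bits.


def source_key_piece(campaign_id):
    """Place a campaign ID in the upper half of the key."""
    if not 0 <= campaign_id < 1 << (KEY_BITS - SOURCE_SHIFT):
        raise ValueError("campaign_id does not fit in the source-side bits")
    return hex(campaign_id << SOURCE_SHIFT)


def trigger_key_piece(conversion_category):
    """Place a conversion category in the lower half of the key."""
    if not 0 <= conversion_category < 1 << SOURCE_SHIFT:
        raise ValueError("conversion_category does not fit in the trigger-side bits")
    return hex(conversion_category)


def combine_key_pieces(source_piece, trigger_piece):
    """Combine both pieces with a bitwise OR and check the 128-bit limit."""
    key = int(source_piece, 16) | int(trigger_piece, 16)
    if key >= 1 << KEY_BITS:
        raise ValueError("combined key exceeds 128 bits")
    return key


source_piece = source_key_piece(campaign_id=345)
trigger_piece = trigger_key_piece(conversion_category=0x400)
full_key = combine_key_pieces(source_piece, trigger_piece)
```

Keeping the source-side and trigger-side data in separate bit ranges means neither side can overwrite the other, and the campaign ID is the extra ad event data that your SDK or app needs to receive at source registration time.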

Iterate design with optional features

The following are additional features that you can include in your measurement solution.

Use the Debug API to generate debug keys (highly recommended)

- Setting a debug key will allow you to receive an unaltered report of a source or trigger event along with the reports generated by the Attribution Reporting API. You can use debug keys to compare reports and find bugs during integration.

Customize attribution behaviors

- Attribution for post install triggers

- This feature can be used in the case where post-install triggers need to be attributed to the same attribution source that drove the install, even if there are other eligible attribution sources that occurred more recently.

- For example, there may be a case where a user clicks an ad that drives an install. Once installed, the user clicks another ad and makes a purchase. In this case, the ad tech company may want the purchase to be attributed to the first click rather than the re-engagement click.

- Use filters to fine tune the data in your event-level reports

- Conversion filters can be set to ignore selected triggers and exclude them from event reports. Because there are limits on the number of triggers per attribution source, the filters allow you to only include the triggers that provide the most useful information in your event reports.

- Filters can also be used to selectively filter out some triggers, effectively ignoring them. For example, if you have a campaign targeting app installs, you may want to filter out post-install triggers from being attributed to sources from that campaign.

- Filters can also be used to customize trigger data based on source data. For example, a source can specify "product": ["1234"], where "product" is the filter key and "1234" is the value. Any trigger with a filter key of "product" that has a value other than "1234" is ignored.

- Customized source and trigger priority

- In the case that multiple attribution sources can be associated with a trigger, or multiple triggers can be attributed to a source, you can use a signed 64-bit integer to prioritize certain source/trigger attributions over others.
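The "product" filter example in the list above can be expressed as a small matching function. This is a simplified sketch of the matching rule as described in this guide, not the API's implementation: the `filters_match` helper is hypothetical, and it assumes that keys present on only one side are ignored.

```python
def filters_match(source_filter_data, trigger_filters):
    """Return False if any key present on both sides has no value in common.

    Keys that appear on only one side are ignored (an assumption made
    for this sketch).
    """
    for key, trigger_values in trigger_filters.items():
        if key not in source_filter_data:
            continue
        if not set(trigger_values) & set(source_filter_data[key]):
            return False
    return True


source_filter_data = {"product": ["1234"]}

same_product = filters_match(source_filter_data, {"product": ["1234"]})
other_product = filters_match(source_filter_data, {"product": ["5678"]})
unrelated_key = filters_match(source_filter_data, {"category": ["shoes"]})
```

Here `same_product` is `True`, `other_product` is `False` (the trigger is ignored), and `unrelated_key` is `True` because the source does not constrain that key.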

Working with MMPs and others

- Redirects to other third parties for source and trigger events

- You can set redirect URLs to allow multiple ad tech platforms to register a request. This can be used to enable cross-network deduplication in attribution.

- Deduplication keys

- When an advertiser uses multiple ad tech platforms to register the same trigger event, a deduplication key can be used to disambiguate these repeated reports. If no deduplication key is provided, duplicate triggers may be reported back to each ad tech platform as unique.
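The idea behind deduplication keys can be illustrated with a small sketch: a trigger is counted only the first time its key is seen for a given source. The `TriggerDeduplicator` class and its identifiers are hypothetical and only model the concept; the API performs its own deduplication on the device.

```python
class TriggerDeduplicator:
    """Track (source, deduplication key) pairs that were already counted."""

    def __init__(self):
        self._seen = set()

    def should_report(self, source_id, dedup_key):
        """Return True the first time a key is seen for a source.

        Triggers without a deduplication key are always reported, which is
        how duplicates can end up being counted as unique conversions.
        """
        if dedup_key is None:
            return True
        pair = (source_id, dedup_key)
        if pair in self._seen:
            return False
        self._seen.add(pair)
        return True


dedup = TriggerDeduplicator()
first = dedup.should_report("source-1", dedup_key=42)
repeat = dedup.should_report("source-1", dedup_key=42)
no_key_a = dedup.should_report("source-1", dedup_key=None)
no_key_b = dedup.should_report("source-1", dedup_key=None)
```

The second registration with key 42 is dropped, while both registrations without a key pass through, which mirrors the duplicate-reporting risk described above.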

Working with cross-platform measurement

- Cross app and web attribution (available in late Q4)

- Supports use cases where a user sees an ad in an app, then converts in a mobile or app browser, or vice versa.
