Summary

Learn how we used service worker libraries to make the Google I/O 2015 web app fast, and offline-first.

Overview

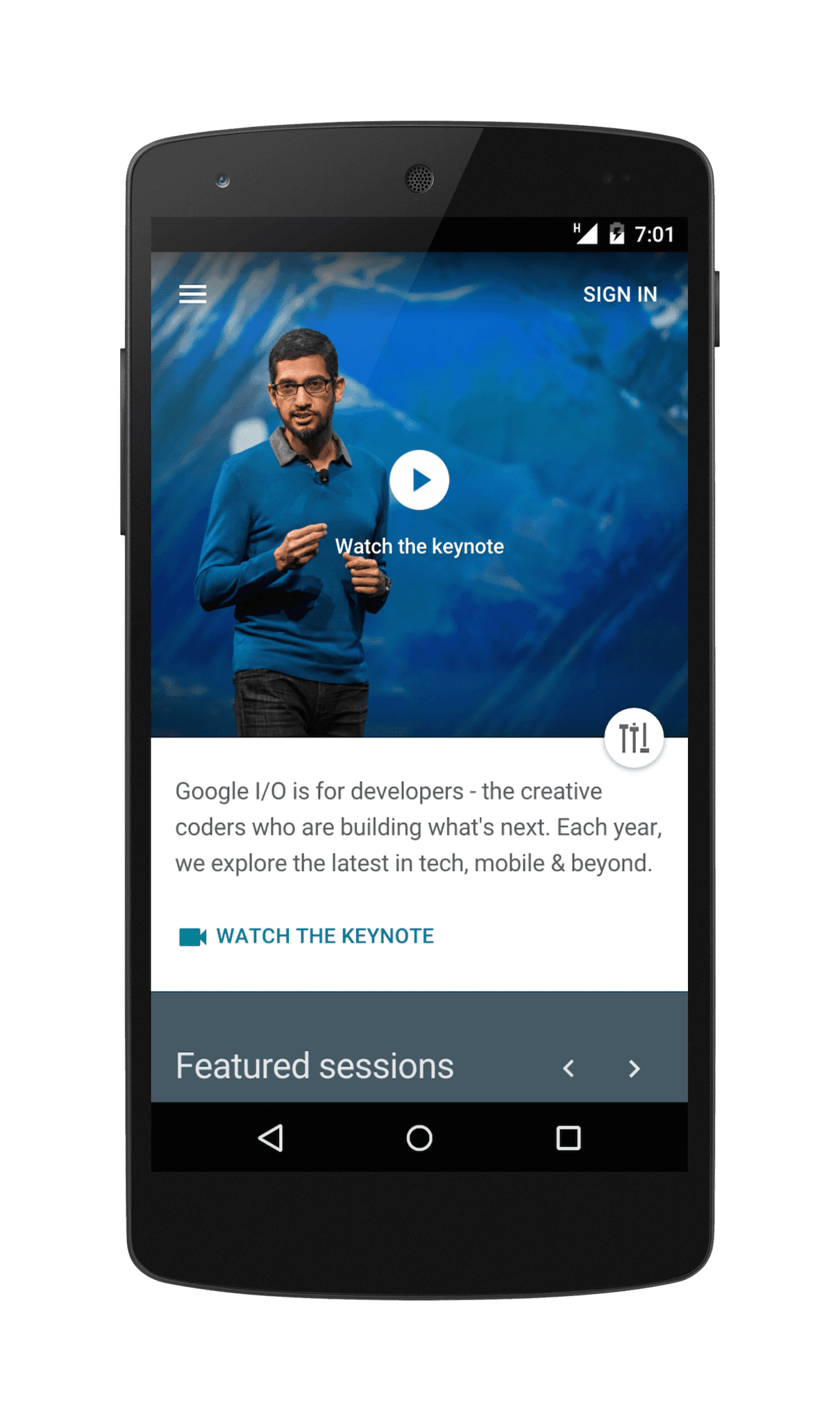

This year’s Google I/O 2015 web app was written by Google’s Developer Relations team, based on designs by our friends at Instrument, who wrote the nifty audio/visual experiment. Our team’s mission was to ensure that the I/O web app (which I’ll refer to by its codename, IOWA) showcased everything the modern web could do. A full offline-first experience was at the top of our list of must-have features.

If you’ve read any of the other articles on this site recently, you’ve undoubtedly encountered service workers, and you won’t be surprised to hear that IOWA’s offline support is heavily reliant on them. Motivated by the real-world needs of IOWA, we developed two libraries to handle two different offline use cases: sw-precache to automate precaching of static resources, and sw-toolbox to handle runtime caching and fallback strategies.

The libraries complement each other nicely, and allowed us to implement a performant strategy in which IOWA’s static content “shell” was always served directly from the cache, and dynamic or remote resources were served from the network, with fallbacks to cached or static responses when needed.

Precaching with sw-precache

IOWA’s static resources (its HTML, JavaScript, CSS, and images) provide the core shell for the web application. There were two specific requirements that were important when thinking about caching these resources: we wanted to make sure that most static resources were cached, and that they were kept up to date. sw-precache was built with those requirements in mind.

Build-time Integration

We integrated sw-precache into IOWA’s gulp-based build process, and we relied on a series of glob patterns to ensure that we generated a complete list of all the static resources IOWA uses.

staticFileGlobs: [
  rootDir + '/bower_components/**/*.{html,js,css}',
  rootDir + '/elements/**',
  rootDir + '/fonts/**',
  rootDir + '/images/**',
  rootDir + '/scripts/**',
  rootDir + '/styles/**/*.css',
  rootDir + '/data-worker-scripts.js'
]

Alternative approaches, like hard-coding a list of file names into an array and remembering to bump a cache version number each time any of those files changed, were far too error-prone, especially given that we had multiple team members checking in code. No one wants to break offline support by leaving out a new file in a manually maintained array! Build-time integration meant we could make changes to existing files and add new files without having those worries.

Updating Cached Resources

sw-precache generates a base service worker script that includes a unique MD5 hash for each resource that gets precached. Each time an existing resource changes, or a new resource is added, the service worker script is regenerated. This automatically triggers the service worker update flow, in which the new resources are cached and out-of-date resources are purged. Any existing resources that have identical MD5 hashes are left as-is. That means users who have visited the site before only end up downloading the minimal set of changed resources, leading to a much more efficient experience than if the entire cache was expired en masse.
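The update flow described above comes down to comparing two URL-to-hash manifests. The following is a minimal sketch under that assumption. The function name diffPrecacheManifests and the manifest shape (a plain object mapping URLs to MD5 strings) are hypothetical and are not sw-precache's actual internals.

```javascript
// Hypothetical helper: compares the URL -> MD5 hash manifests embedded in an
// old and a new service worker script. (Assumed shape; not sw-precache's code.)
function diffPrecacheManifests(oldManifest, newManifest) {
  const has = (obj, key) => Object.prototype.hasOwnProperty.call(obj, key);
  const toFetch = [];   // new or changed URLs that must be downloaded
  const toDelete = [];  // URLs no longer listed, whose cached copies can be purged
  const unchanged = []; // identical hashes: the cached copy is reused as-is

  for (const [url, hash] of Object.entries(newManifest)) {
    if (has(oldManifest, url) && oldManifest[url] === hash) {
      unchanged.push(url);
    } else {
      toFetch.push(url);
    }
  }
  for (const url of Object.keys(oldManifest)) {
    if (!has(newManifest, url)) {
      toDelete.push(url);
    }
  }
  return { toFetch, toDelete, unchanged };
}
```

For example, comparing { 'index.html': 'a1', 'app.js': 'b2', 'old.css': 'c3' } against { 'index.html': 'a1', 'app.js': 'b9', 'new.css': 'd4' } yields a toFetch list of app.js and new.css and a toDelete list of old.css, and it leaves index.html untouched. That last case is the "minimal set of changed resources" behavior described above.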

Each file that matches one of the glob patterns is downloaded and cached the first time a user visits IOWA. We made an effort to ensure that only critical resources needed to render the page were precached. Secondary content, like the media used in the audio/visual experiment or the profile images of the sessions’ speakers, was deliberately not precached. Instead, we used the sw-toolbox library to handle offline requests for those resources.

sw-toolbox, for All Our Dynamic Needs

As mentioned, precaching every resource that a site needs to work offline isn’t feasible. Some resources are too large or infrequently used to make it worthwhile, and other resources are dynamic, like the responses from a remote API or service. But just because a request isn’t precached doesn’t mean it has to result in a NetworkError. sw-toolbox gave us the flexibility to implement request handlers that handle runtime caching for some resources and custom fallbacks for others. We also used it to update our previously cached resources in response to push notifications.

Here are a few examples of custom request handlers that we built on top of sw-toolbox. It was easy to integrate them with the base service worker script via sw-precache’s importScripts parameter, which pulls standalone JavaScript files into the scope of the service worker.

Audio/Visual Experiment

For the audio/visual experiment, we used sw-toolbox’s networkFirst cache strategy. All HTTP requests matching the URL pattern for the experiment would first be made against the network, and if a successful response was returned, that response would then be stashed away using the Cache Storage API. If a subsequent request was made when the network was unavailable, the previously cached response would be used.

Because the cache was automatically updated each time a successful network response came back, we didn’t have to specifically version resources or expire entries.

toolbox.router.get('/experiment/(.+)', toolbox.networkFirst);
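To show what a network-first strategy does under the hood, here is a hand-written approximation built on the Cache Storage API's match() and put(). It is a sketch, not sw-toolbox's implementation. The function name and the injected cache and fetchFn parameters are assumptions, chosen so that the logic can be read, and exercised, in isolation.

```javascript
// Hand-written network-first sketch (not sw-toolbox's source).
// `cache` is assumed to expose match()/put() like a Cache Storage cache,
// and `fetchFn` is assumed to behave like fetch().
async function networkFirst(request, cache, fetchFn) {
  try {
    const response = await fetchFn(request);
    if (response.ok) {
      // Store a copy for offline use; the original body goes to the page.
      await cache.put(request, response.clone());
      return response;
    }
    // Non-2xx response: prefer a cached copy, else pass the response through.
    const cachedOnError = await cache.match(request);
    return cachedOnError || response;
  } catch (error) {
    // Network failure (for example, offline): fall back to the cache.
    const cached = await cache.match(request);
    if (cached) {
      return cached;
    }
    throw error;
  }
}
```

In a service worker, this could be wired up inside a fetch listener with something like event.respondWith(caches.open('experiment').then((cache) => networkFirst(event.request, cache, fetch))). The cache name there is arbitrary. Because every successful response overwrites the cached entry, no explicit versioning or expiration is needed, which matches the reasoning above.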

Speaker Profile Images

For speaker profile images, our goal was to display a previously cached version of a given speaker’s image if it was available, falling back to the network to retrieve the image if it wasn’t. If that network request failed, as a final fallback, we used a generic placeholder image that was precached (and therefore would always be available). This is a common strategy to use when dealing with images that could be replaced with a generic placeholder, and it was easy to implement by chaining sw-toolbox’s cacheFirst and cacheOnly handlers.

var DEFAULT_PROFILE_IMAGE = 'images/touch/homescreen96.png';

// Try the cache, then the network; if both fail, serve the precached placeholder.
function profileImageRequest(request) {
  return toolbox.cacheFirst(request).catch(function() {
    return toolbox.cacheOnly(new Request(DEFAULT_PROFILE_IMAGE));
  });
}

// Precache the placeholder so that the final fallback is always available offline.
toolbox.precache([DEFAULT_PROFILE_IMAGE]);

toolbox.router.get('/(.+)/images/speakers/(.*)',
                   profileImageRequest,
                   {origin: /.*\.googleapis\.com/});
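For readers not using sw-toolbox, here is a hand-written sketch of the same cache, then network, then placeholder chain. It is not sw-toolbox's code. The function name and the injected cache, fetchFn, and placeholderUrl parameters are hypothetical. One detail matters for images served from another origin, as the speaker photos were: a no-cors request yields an opaque response that reports status 0, so the sketch accepts opaque responses rather than treating them as failures.

```javascript
// Hand-written cache -> network -> placeholder sketch (not sw-toolbox's code).
// `cache` exposes match()/put() like a Cache Storage cache, `fetchFn` behaves
// like fetch(), and `placeholderUrl` is assumed to have been precached.
async function cacheFirstWithPlaceholder(request, cache, fetchFn, placeholderUrl) {
  // 1. A previously cached copy of this image wins.
  const cached = await cache.match(request);
  if (cached) {
    return cached;
  }
  try {
    // 2. Otherwise go to the network and keep a copy for next time.
    const response = await fetchFn(request);
    // Opaque cross-origin responses have ok === false and status 0, but are
    // usable as images, so only reject non-opaque error responses.
    if (!response.ok && response.type !== 'opaque') {
      throw new Error('Unexpected status: ' + response.status);
    }
    await cache.put(request, response.clone());
    return response;
  } catch (error) {
    // 3. Last resort: the precached generic placeholder.
    const placeholder = await cache.match(placeholderUrl);
    if (placeholder) {
      return placeholder;
    }
    throw error;
  }
}
```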

Updates to Users’ Schedules

One of the key features of IOWA was allowing signed-in users to create and maintain a schedule of sessions they planned on attending. As you’d expect, session updates were made via HTTP PUT and DELETE requests to a backend server, and we spent some time working out the best way to handle those state-modifying requests when the user is offline. We settled on a service worker request handler that queued failed requests in IndexedDB, coupled with logic in the main web page that checked IndexedDB for queued requests and retried any that it found.

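var DB_NAME = 'shed-offline-session-updates';

// Persist the failed request's URL and method so the page can replay it later.
function queueFailedSessionUpdateRequest(request) {
  return simpleDB.open(DB_NAME).then(function(db) {
    return db.set(request.url, request.method);
  });
}

function handleSessionUpdateRequest(request) {
  return self.fetch(request).then(function(response) {
    if (response.status >= 500) {
      // Treat server errors like network failures. Returning Response.error()
      // here would resolve the promise, so throw to reach the .catch() below.
      throw new Error('Server error: ' + response.status);
    }
    return response;
  }).catch(function() {
    return queueFailedSessionUpdateRequest(request).then(function() {
      // respondWith() needs a Response; report the failure to the page.
      return Response.error();
    });
  });
}

toolbox.router.put('/(.+)api/v1/user/schedule/(.+)',
                   handleSessionUpdateRequest);
toolbox.router.delete('/(.+)api/v1/user/schedule/(.+)',
                      handleSessionUpdateRequest);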
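The handler above hinges on one detail: a fetch() promise only rejects when the request cannot be completed at all, so HTTP 5xx responses have to be turned into failures explicitly. Here is a minimal sketch of that decision, with the network call and the IndexedDB write injected as functions. The names sendOrQueue, fetchFn, and enqueue are hypothetical, and so is the shape of the result, which is { queued, response }.

```javascript
// Hypothetical helper: send a state-modifying request, and queue it for a later
// replay if the network is unreachable or the server reports a 5xx error.
// `enqueue` stands in for persisting the request (for example, to IndexedDB).
async function sendOrQueue(request, fetchFn, enqueue) {
  let response;
  try {
    response = await fetchFn(request);
  } catch (networkError) {
    // fetch() rejects only when no response could be obtained at all.
    await enqueue(request);
    return { queued: true, response: null };
  }
  if (response.status >= 500) {
    // A server error still resolves fetch(), so it must be handled explicitly.
    await enqueue(request);
    return { queued: true, response: response };
  }
  // 2xx and 4xx responses are not queued here, on the assumption that
  // retrying a request the server rejected would not help.
  return { queued: false, response: response };
}
```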

Because the retries were made from the context of the main page, we could be sure that they included a fresh set of user credentials. Once the retries succeeded, we displayed a message to let the user know that their previously queued updates had been applied.

simpleDB.open(QUEUED_SESSION_UPDATES_DB_NAME).then(function(db) {
  var replayPromises = [];
  return db.forEach(function(url, method) {
    var promise = IOWA.Request.xhrPromise(method, url, true).then(function() {
      return db.delete(url).then(function() {
        return true;
      });
    });
    replayPromises.push(promise);
  }).then(function() {
    if (replayPromises.length) {
      return Promise.all(replayPromises).then(function() {
        IOWA.Elements.Toast.showMessage(
            'My Schedule was updated with offline changes.');
      });
    }
  });
}).catch(function() {
  IOWA.Elements.Toast.showMessage(
      'Offline changes could not be applied to My Schedule.');
});

Offline Google Analytics

In a similar vein, we implemented a handler to queue any failed Google Analytics requests and attempt to replay them later, when the network was hopefully available. With this approach, being offline doesn’t mean sacrificing the insights Google Analytics offers. We added the qt parameter to each queued request, set to the amount of time that had passed since the request was first attempted, to ensure that a proper event attribution time made it to the Google Analytics backend. Google Analytics officially supports qt values of only up to 4 hours, so we made a best-effort attempt to replay those requests as soon as possible, each time the service worker started up.

var DB_NAME = 'offline-analytics';
// Discard queued hits older than 24 hours (in milliseconds). Hits replayed with
// a qt value above 4 hours may not be accepted by Google Analytics anyway.
var EXPIRATION_TIME_DELTA = 86400000;
var ORIGIN = /https?:\/\/((www|ssl)\.)?google-analytics\.com/;

function replayQueuedAnalyticsRequests() {
  simpleDB.open(DB_NAME).then(function(db) {
    db.forEach(function(url, originalTimestamp) {
      // qt is the time, in milliseconds, since the hit was first attempted.
      var timeDelta = Date.now() - originalTimestamp;
      var replayUrl = url + '&qt=' + timeDelta;
      fetch(replayUrl).then(function(response) {
        if (response.status >= 500) {
          // Throw so that the .catch() below also handles server errors.
          throw new Error('Server error: ' + response.status);
        }
        db.delete(url);
      }).catch(function(error) {
        if (timeDelta > EXPIRATION_TIME_DELTA) {
          db.delete(url);
        }
      });
    });
  });
}

function queueFailedAnalyticsRequest(request) {
  return simpleDB.open(DB_NAME).then(function(db) {
    return db.set(request.url, Date.now());
  });
}

function handleAnalyticsCollectionRequest(request) {
  return self.fetch(request).then(function(response) {
    if (response.status >= 500) {
      throw new Error('Server error: ' + response.status);
    }
    return response;
  }).catch(function() {
    return queueFailedAnalyticsRequest(request).then(function() {
      // respondWith() needs a Response; report the failure to the page.
      return Response.error();
    });
  });
}

toolbox.router.get('/collect',
                   handleAnalyticsCollectionRequest,
                   {origin: ORIGIN});
toolbox.router.get('/analytics.js',
                   toolbox.networkFirst,
                   {origin: ORIGIN});

replayQueuedAnalyticsRequests();
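The replay URL can also be built with the URL API instead of string concatenation. That version works whether or not the queued URL already carries a query string or a qt value. This is a sketch: buildReplayUrl and isExpired are hypothetical helpers, and all times are in milliseconds, matching EXPIRATION_TIME_DELTA above.

```javascript
// Hypothetical helpers for replaying a queued Google Analytics hit.
// qt ("queue time") is the delay, in milliseconds, between the original
// hit and the replay.
function buildReplayUrl(queuedUrl, originalTimestamp, now) {
  const url = new URL(queuedUrl);
  // set() replaces any existing qt value instead of appending a duplicate.
  // Note: modifying searchParams re-serializes the whole query string.
  url.searchParams.set('qt', String(now - originalTimestamp));
  return url.toString();
}

// A queued hit is dropped once it is older than maxAgeMs.
function isExpired(originalTimestamp, now, maxAgeMs) {
  return now - originalTimestamp > maxAgeMs;
}
```

For example, buildReplayUrl('https://www.google-analytics.com/collect?v=1&t=pageview', 1000, 61000) returns 'https://www.google-analytics.com/collect?v=1&t=pageview&qt=60000'.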

Push Notification Landing Pages

Service workers didn’t just handle IOWA’s offline functionality—they also powered the push notifications that we used to notify users about updates to their bookmarked sessions. The landing page associated with those notifications displayed the updated session details. Those landing pages were already being cached as part of the overall site, so they already worked offline, but we needed to make sure that the session details on that page were up to date, even when viewed offline. To do that, we modified previously cached session metadata with the updates that triggered the push notification, and we stored the result in the cache. This up-to-date info will be used the next time the session details page is opened, whether that takes place online or offline.

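// `sessions` holds the updated session objects that triggered the push
// notification (how they are obtained is not shown here).
caches.open(toolbox.options.cacheName).then(function(cache) {
  cache.match('api/v1/schedule').then(function(response) {
    if (response) {
      response.json().then(function(schedule) {
        sessions.forEach(function(session) {
          schedule.sessions[session.id] = session;
        });
        cache.put('api/v1/schedule',
            new Response(JSON.stringify(schedule),
                {headers: {'Content-Type': 'application/json'}}));
      });
    } else {
      // Nothing cached yet, so fetch and cache the full schedule.
      toolbox.cache('api/v1/schedule');
    }
  });
});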
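The merge step in the middle of that snippet is easy to isolate as a pure function, which makes it simpler to test. Here is a minimal sketch. It assumes the schedule shape used above, where schedule.sessions maps session IDs to session objects. The name mergeSessionUpdates is hypothetical. Unlike the snippet above, it returns a new object rather than mutating the parsed schedule.

```javascript
// Hypothetical pure helper: returns a new schedule in which each updated
// session replaces (or adds) the entry with the same id. The input schedule
// and its sessions map are left unmodified.
function mergeSessionUpdates(schedule, updatedSessions) {
  const sessions = Object.assign({}, schedule.sessions);
  for (const session of updatedSessions) {
    sessions[session.id] = session;
  }
  return Object.assign({}, schedule, { sessions: sessions });
}
```

Inside the service worker, the result could then be stored the same way as above, for example with cache.put('api/v1/schedule', new Response(JSON.stringify(mergeSessionUpdates(schedule, sessions)), {headers: {'Content-Type': 'application/json'}})).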

Gotchas & Considerations

Of course, no one works on a project of IOWA’s scale without running into a few gotchas. Here are some of the ones we ran into, and how we worked around them.

Stale Content

Whenever you’re planning a caching strategy, whether implemented via service workers or with the standard browser cache, there’s a tradeoff between delivering resources as quickly as possible versus delivering the freshest resources. Via sw-precache, we implemented an aggressive cache-first strategy for our application’s shell, meaning our service worker would not check the network for updates before returning the HTML, JavaScript, and CSS on the page.

Fortunately, we were able to take advantage of service worker lifecycle events to detect when new content was available after the page had already loaded. When an updated service worker is detected, we display a toast message to the user letting them know that they should reload their page to see the newest content.

if (navigator.serviceWorker && navigator.serviceWorker.controller) {
  navigator.serviceWorker.controller.onstatechange = function(e) {
    if (e.target.state === 'redundant') {
      var tapHandler = function() {
        window.location.reload();
      };
      IOWA.Elements.Toast.showMessage(
          'Tap here or refresh the page for the latest content.',
          tapHandler);
    }
  };
}
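A commonly used alternative is to watch the registration for a newly installed worker rather than waiting for the current controller to become redundant. The sketch below is not what IOWA shipped. The function watchForServiceWorkerUpdates and its hasController and onUpdateReady callbacks are hypothetical. The updatefound event, registration.installing, the statechange event, and the installed state are standard parts of the Service Worker API.

```javascript
// Alternative sketch (not IOWA's code): notify when an updated service worker
// has finished installing. `hasController` reports whether the page is already
// controlled; `onUpdateReady` might, for example, show the reload toast.
function watchForServiceWorkerUpdates(registration, hasController, onUpdateReady) {
  registration.addEventListener('updatefound', () => {
    const newWorker = registration.installing;
    if (!newWorker) {
      return;
    }
    newWorker.addEventListener('statechange', () => {
      // 'installed' plus an existing controller means newer content is waiting.
      // Without a controller this is the first install, so nothing is stale.
      if (newWorker.state === 'installed' && hasController()) {
        onUpdateReady(newWorker);
      }
    });
  });
}
```

The trade-off is timing. A worker in the installed state has not taken control yet, so this fires earlier than the redundant check above. A reload only shows the new content once the new worker activates, which typically happens after every tab controlled by the old worker has closed, unless the new worker calls skipWaiting().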

Make Sure Static Content is Static!

sw-precache uses an MD5 hash of local files’ contents, and only fetches resources whose hash has changed. This means that resources are available on the page almost immediately, but it also means that once something is cached, it’s going to stay cached until it’s assigned a new hash in an updated service worker script.

We ran into an issue with this behavior during I/O due to our backend needing to dynamically update the livestream YouTube video IDs for each day of the conference. Because the underlying template file was static and didn’t change, our service worker update flow wasn’t triggered, and what was meant to be a dynamic response from the server with updating YouTube videos ended up being the cached response for a number of users.

You can avoid this type of issue by making sure your web application is structured so that the shell is always static and can be safely precached, while any dynamic resources which modify the shell are loaded independently.

Cache-bust Your Precaching Requests

When sw-precache makes requests for resources to precache, it uses those responses indefinitely as long as it thinks that the MD5 hash for the file hasn’t changed. This means that it’s particularly important to make sure that the response to the precaching request is a fresh one, and not returned from the browser’s HTTP cache. (Yes, fetch() requests made in a service worker can respond with data from the browser’s HTTP cache.)

To ensure that responses we precache are straight from the network and not the browser’s HTTP cache, sw-precache automatically appends a cache-busting query parameter to each URL it requests. If you’re not using sw-precache and you are making use of a cache-first response strategy, make sure that you do something similar in your own code!

A cleaner solution to cache-busting would be to set the cache mode of each Request used for precaching to reload, which will ensure that the response comes from the network. However, as of this writing, the cache mode option isn’t supported in Chrome.
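If you need to do this yourself, the core of it is small. Here is a minimal sketch that assumes a hypothetical addCacheBustingParam helper and an arbitrary parameter name (cacheBust). It is not sw-precache's code. The token can be anything that changes between versions, such as the file's hash.

```javascript
// Hypothetical helper: returns `urlString` (resolved against `baseUrl`) with
// a throwaway query parameter added, so that the precaching fetch cannot be
// answered from the browser's HTTP cache. The parameter name is arbitrary.
function addCacheBustingParam(urlString, baseUrl, token) {
  const url = new URL(urlString, baseUrl);
  url.searchParams.set('cacheBust', token);
  return url.toString();
}
```

Only use the busted URL for the network request. Store the response under the original URL (for example, with cache.put(originalUrl, response)), because page requests never carry the extra parameter and would otherwise miss the cache. Where the cache mode option is supported, new Request(url, {cache: 'reload'}) achieves the same effect without altering the URL.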

Support for Logging In & Out

IOWA allowed users to log in using their Google Accounts and update their customized event schedules, but that also meant that users might later log out. Caching personalized response data is obviously a tricky topic, and there’s not always a single right approach.

Since viewing your personal schedule, even when offline, was core to the IOWA experience, we decided that using cached data was appropriate. Whenever a user signed out, we made sure to clear previously cached session data.

self.addEventListener('message', function(event) {
  if (event.data === 'clear-cached-user-data') {
    // waitUntil() keeps the service worker alive until the cleanup finishes.
    event.waitUntil(
      caches.open(toolbox.options.cacheName).then(function(cache) {
        return cache.keys().then(function(requests) {
          // Only delete cached responses for per-user API endpoints.
          var userDataRequests = requests.filter(function(request) {
            return request.url.indexOf('api/v1/user/') !== -1;
          });
          return Promise.all(userDataRequests.map(function(userDataRequest) {
            return cache.delete(userDataRequest);
          }));
        });
      })
    );
  }
});
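The page needs to send that message when the user signs out. Here is a minimal page-side sketch. The function requestCachedUserDataPurge is hypothetical and would be called as requestCachedUserDataPurge(navigator.serviceWorker) from whatever code completes sign-out. The message string matches the one the handler above checks for.

```javascript
// Hypothetical page-side helper: ask the controlling service worker to purge
// cached per-user responses. Returns true if a message was sent.
function requestCachedUserDataPurge(serviceWorkerContainer) {
  const controller = serviceWorkerContainer && serviceWorkerContainer.controller;
  if (!controller) {
    // No controlling service worker, so there is nobody to message.
    return false;
  }
  controller.postMessage('clear-cached-user-data');
  return true;
}
```

If no service worker controls the page (for example, after a hard reload), there is no worker to message. In that case the page could clear the entries directly through window.caches, which exposes the same Cache Storage API.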

Watch Out for Extra Query Parameters!

When a service worker checks for a cached response, it uses a request URL as the key. By default, the request URL must exactly match the URL used to store the cached response, including any query parameters in the search portion of the URL.

This ended up causing an issue for us during development, when we started using URL parameters to keep track of where our traffic was coming from. For example, we added the utm_source=notification parameter to URLs that were opened when clicking on one of our notifications, and used utm_source=web_app_manifest in the start_url for our web app manifest. URLs that previously matched cached responses were coming up as misses when those parameters were appended.

This is partially addressed by the ignoreSearch option, which can be used when calling Cache.match(). Unfortunately, Chrome doesn't yet support ignoreSearch, and even if it did, it's an all-or-nothing behavior. What we needed was a way to ignore some URL query parameters while taking others that were meaningful into account.

We ended up extending sw-precache to strip out some query parameters before checking for a cache match, and to allow developers to customize which parameters are ignored via the ignoreUrlParametersMatching option.

Here's the underlying implementation:

function stripIgnoredUrlParameters(originalUrl, ignoredRegexes) {
  var url = new URL(originalUrl);

  url.search = url.search.slice(1)
    .split('&')
    .map(function(kv) {
      return kv.split('=');
    })
    .filter(function(kv) {
      return ignoredRegexes.every(function(ignoredRegex) {
        return !ignoredRegex.test(kv[0]);
      });
    })
    .map(function(kv) {
      return kv.join('=');
    })
    .join('&');

  return url.toString();
}
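For comparison, the same filtering can be expressed with URLSearchParams. This is a sketch rather than the code sw-precache ships, and stripIgnoredParams is a hypothetical name. URLSearchParams re-serializes the query string whenever a parameter is removed, so encodings can change (a %20 becomes +). That matters if the result is compared against cache keys as a plain string.

```javascript
// Alternative sketch using URLSearchParams (not sw-precache's code).
// Use non-global regexes: test() on a /g regex is stateful via lastIndex.
function stripIgnoredParams(originalUrl, ignoredRegexes) {
  const url = new URL(originalUrl);
  // Copy the keys first so that deleting entries does not disturb iteration.
  for (const key of Array.from(url.searchParams.keys())) {
    if (ignoredRegexes.some((regex) => regex.test(key))) {
      url.searchParams.delete(key);
    }
  }
  return url.toString();
}
```

With [/^utm_/] as the ignore list, 'https://example.com/?utm_source=notification&id=5' becomes 'https://example.com/?id=5', so it can match a cache entry stored under that URL. A meaningful parameter such as id is still taken into account.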

What This Means for You

The service worker integration in the Google I/O web app is likely the most complex real-world usage that has been deployed to this point. We hope you’ll use the tools we created, sw-precache and sw-toolbox, as well as the techniques described here, to power your own web applications.

Service workers are a progressive enhancement that you can start using today, and when used as part of a properly structured web app, the speed and offline benefits are significant for your users.