Interactive Canvas is a framework built on the Google Assistant that allows developers to add visual, immersive experiences to conversational Actions. This visual experience is an interactive web app that the Assistant sends as a response to the user in conversation. Unlike traditional rich responses that exist in-line in an Assistant conversation, the Interactive Canvas web app renders as a full-screen web view.

You should use Interactive Canvas if you want to do any of the following in your Action:

- Create full-screen visuals

- Create custom animations and transitions

- Do data visualization

- Create custom layouts and GUIs

Supported devices

Interactive Canvas is currently available on the following devices:

- Smart Displays

- Google Nest Hubs

- Android mobile devices

How it works

An Action that uses Interactive Canvas works similarly to a regular conversational Action. The user still has a back-and-forth conversation with the Assistant to fulfill their goal; however, instead of returning responses in-line in the conversation, an Interactive Canvas Action sends a response to the user that opens a full-screen web app. The user continues to interact with the web app through voice or touch until the conversation is over.

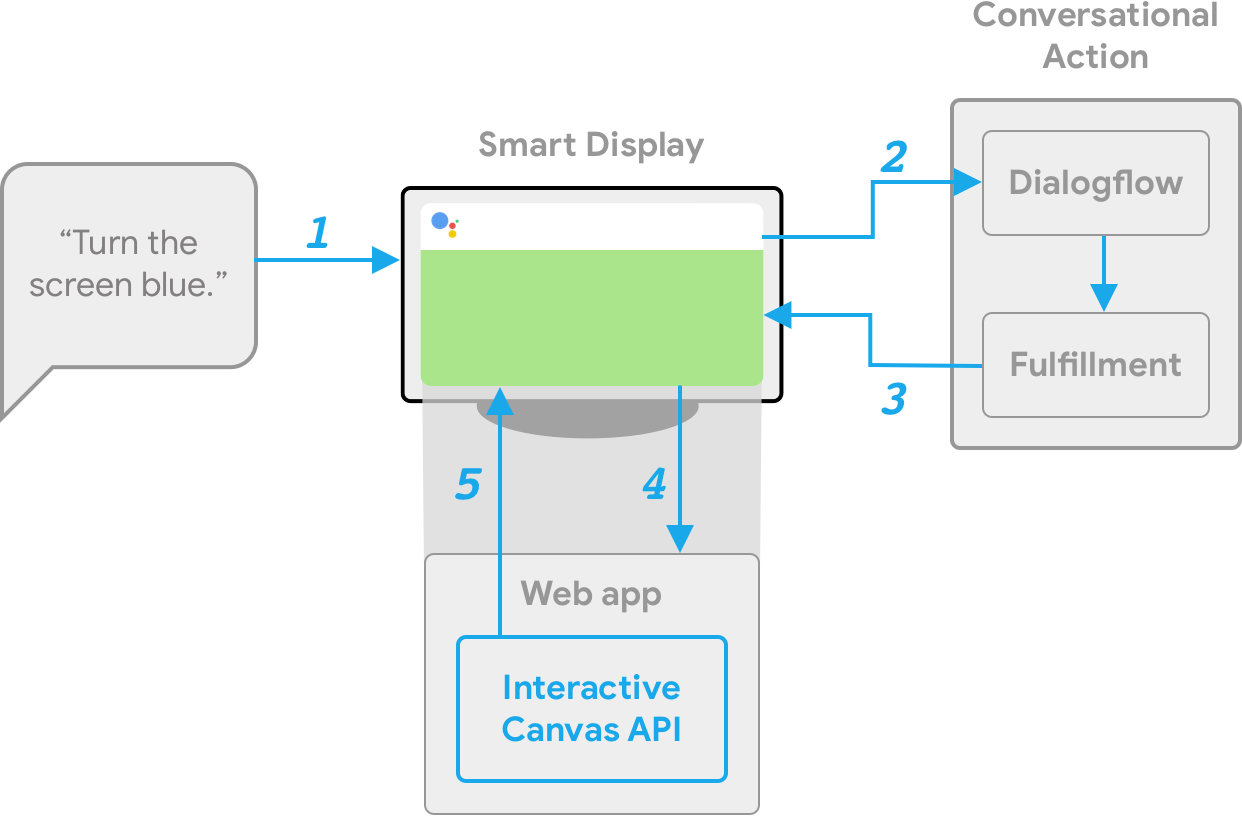

There are several components to an Action that uses Interactive Canvas:

- Conversational Action: An Action that uses a conversational interface to fulfill user requests. Interactive Canvas Actions use web views to render responses instead of rich cards or simple text and voice responses. Conversational Actions use the following components:

  - Dialogflow agent: A project in Dialogflow that you customize to converse with your Action users.

  - Fulfillment: Code that is deployed as a webhook that implements the conversational logic for your Dialogflow agent and communicates with your web app.

- Web app: A front-end web app with customized visuals that your Action sends as a response to users during a conversation. You build the web app with web standards like HTML, JavaScript, and CSS.

The conversational Action and web app communicate with each other using the following:

- Interactive Canvas API: A JavaScript API that you include in the web app to enable communication between the web app and your conversational Action.

- `HtmlResponse`: A response that contains the URL of the web app and the data to pass to it. You can use the Node.js or Java client libraries to return an `HtmlResponse`.
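As a rough illustration of what the fulfillment hands back, the sketch below models the response as a plain object with a URL and a data payload. It does not use the real client library: `buildHtmlResponse`, `handleShowSceneIntent`, the example URL, and the `scene` field are all hypothetical names chosen for this sketch.

```javascript
// Hypothetical stand-in for the client library's HtmlResponse: a plain object
// holding the two parts described above, the web app URL and the data to pass.
function buildHtmlResponse(url, data) {
  return { url, data };
}

// Hypothetical fulfillment handler for one matched intent. The URL and the
// `scene` field are made up for this sketch.
function handleShowSceneIntent(sceneName) {
  return buildHtmlResponse('https://example.com/my-canvas-app/', { scene: sceneName });
}

const response = handleShowSceneIntent('welcome');
console.log(JSON.stringify(response));
// prints {"url":"https://example.com/my-canvas-app/","data":{"scene":"welcome"}}
```

In a real webhook, the client library's own `HtmlResponse` type would take the place of the plain object; check the library reference for its exact constructor options.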

To illustrate how Interactive Canvas works, imagine a hypothetical Action called Cool Colors that changes the device screen color to a color the user specifies. After the user invokes the Action, the flow looks like the following:

- The user says "Turn the screen blue" to the Assistant device.

- The Actions on Google platform routes the user's request to Dialogflow to match an intent.

- The fulfillment for the matched intent runs and an `HtmlResponse` is sent to the device. The device uses the URL to load the web app if it has not yet been loaded.

- When the web app loads, it registers callbacks with the `interactiveCanvas` API. The `data` object's value is then passed into the registered `onUpdate` callback of the web app. In our example, the fulfillment sends an `HtmlResponse` with a `data` object that includes a variable with the value of `blue`.

- The custom logic for your web app reads the `data` value of the `HtmlResponse` and makes the defined changes. In our example, this turns the screen blue.

- `interactiveCanvas` sends the callback update to the device.
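The web app side of the Cool Colors flow can be sketched as follows. This is a simulation that runs outside the Assistant, not the real API: the `interactiveCanvas` object here is a hypothetical stub that only stores the registered callbacks, `page` stands in for the DOM, and `deliverHtmlResponse` and the `color` field name are assumptions made for illustration.

```javascript
// Hypothetical stub for the `interactiveCanvas` object that is available to
// the web app on a real device. It only stores the registered callbacks.
const interactiveCanvas = {
  registered: null,
  ready(callbacks) {
    this.registered = callbacks;
  },
};

// Hypothetical stand-in for the page; a real web app would style the DOM.
const page = { backgroundColor: 'white' };

// Custom web app logic: read the data value and make the defined change.
const callbacks = {
  onUpdate(data) {
    // The `color` field name is an assumption made for this sketch.
    if (data && typeof data.color === 'string') {
      page.backgroundColor = data.color;
    }
  },
};

// When the web app loads, it registers its callbacks.
interactiveCanvas.ready(callbacks);

// Simulates the device passing an HtmlResponse's data to onUpdate.
function deliverHtmlResponse(htmlResponse) {
  interactiveCanvas.registered.onUpdate(htmlResponse.data);
}

deliverHtmlResponse({
  url: 'https://example.com/cool-colors/', // made-up URL
  data: { color: 'blue' },
});
console.log(page.backgroundColor); // prints "blue"
```

Keeping the color change inside `onUpdate` means every later response in the conversation goes through the same code path, so a follow-up request for another color needs no extra wiring in the web app.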

Next steps

To learn how to build an Interactive Canvas Action, see the Build Overview page.

To see the code for a complete Interactive Canvas Action, see the sample.